While the holiday season is months away, we're excited to celebrate "Christmas in July" by revisiting a ChristmasGAN project initiated by Hasty, now a CloudFactory company.

In this article, we'll present our approach, results, and findings, providing an introduction to building Generative Adversarial Networks (GANs) for practical image-to-image translation. You'll gain an understanding of how GANs function and take away actionable insights for your own GAN experiments.

We hope to bring some mid-year Christmas cheer with our inspiring results!

What is a Generative Adversarial Network (GAN)?

By now, most of you have heard of GANs, which researchers have used for many exciting things like image synthesis, human face generation, and image super-resolution. We'll deliberately avoid technicalities in this post, but here's an overview of GANs if you're new to the topic:

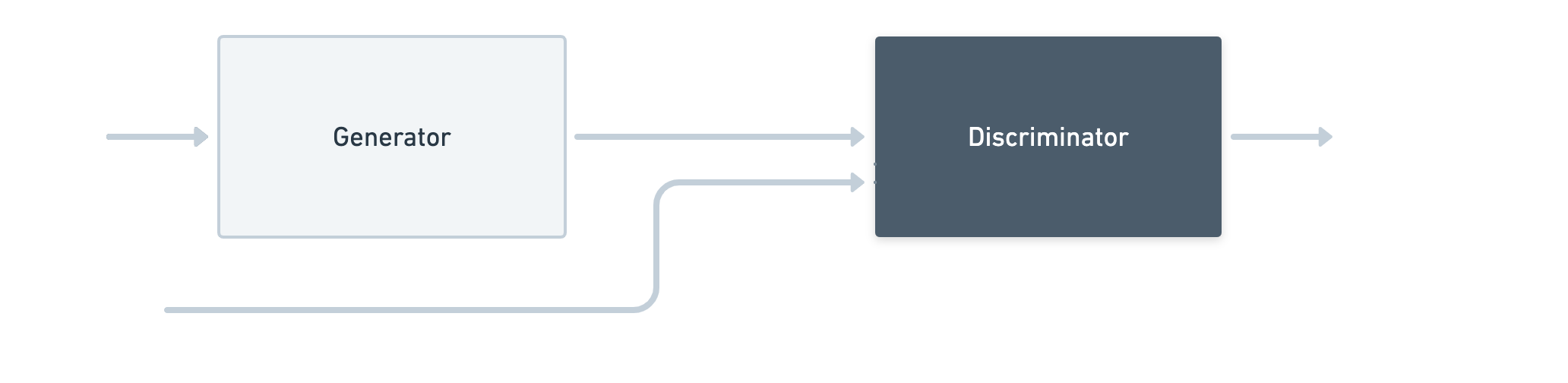

GANs are generative AI models within the framework of deep learning. They consist of two players: the generator and the discriminator.

The generator tries to create realistic samples that the modeler desires, while the discriminator tries to tell the difference between real and generated samples. Through competition and training, the generator learns to produce convincing samples, and the discriminator becomes better at detecting fakes.
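To make the competition concrete, here is a minimal sketch of the two losses in plain Python. It assumes the discriminator outputs a probability that a sample is real and uses the common non-saturating generator loss; the scores are made up for illustration and are not from our experiments.

```python
import math

def bce(prediction, target, eps=1e-12):
    """Binary cross-entropy for a single probability in (0, 1)."""
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1 - target) * math.log(1.0 - p))

def discriminator_loss(scores_real, scores_fake):
    """The discriminator wants real samples scored 1 and generated ones 0."""
    real_term = sum(bce(s, 1.0) for s in scores_real) / len(scores_real)
    fake_term = sum(bce(s, 0.0) for s in scores_fake) / len(scores_fake)
    return real_term + fake_term

def generator_loss(scores_fake):
    """The generator wants its samples scored as real (non-saturating form)."""
    return sum(bce(s, 1.0) for s in scores_fake) / len(scores_fake)

# Hypothetical discriminator outputs: probability that a sample is real.
confident_d = discriminator_loss([0.9, 0.8], [0.1, 0.2])  # fakes are spotted
fooled_d = discriminator_loss([0.9, 0.8], [0.7, 0.6])     # fakes look real
```

When the generated samples fool the discriminator, the discriminator's loss rises and the generator's loss falls, which is the tug-of-war that drives training.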

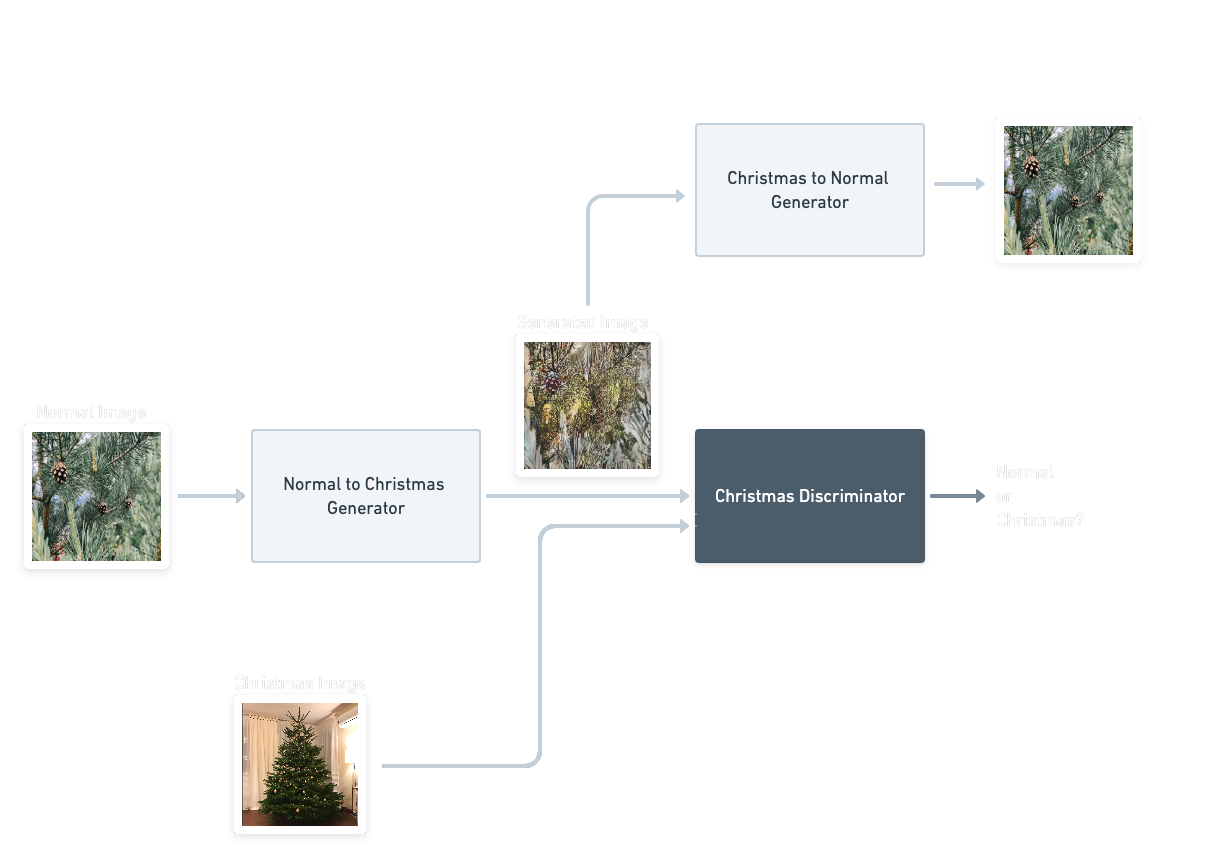

This entire model is represented below:

Figure 1: A basic model of Generative Adversarial Networks (GANs)

What would a ChristmasGAN look like?

Initially, this goal seemed simple and easy to achieve. But we soon realized that describing what makes an image look Christmassy in a way that a GAN can understand is actually difficult. There's a lot of social and situational context surrounding the concept of Christmas, making it challenging to capture and convey in the GAN's training process.

Traditionally, GAN methods that model image-to-image translation do so between domains with a high degree of symmetry or similarity. One example would be the translation from horse to zebra, where the addition or the removal of stripes is enough to do the translation. Another would be from apples to oranges, where color and texture are sufficient to complete the translation.

Figure 2: Horse to zebra, accomplished via adding stripes - image taken from CycleGAN repository.

Figure 3: Apples to oranges, accomplished via color and texture changes - image taken from CycleGAN repository.

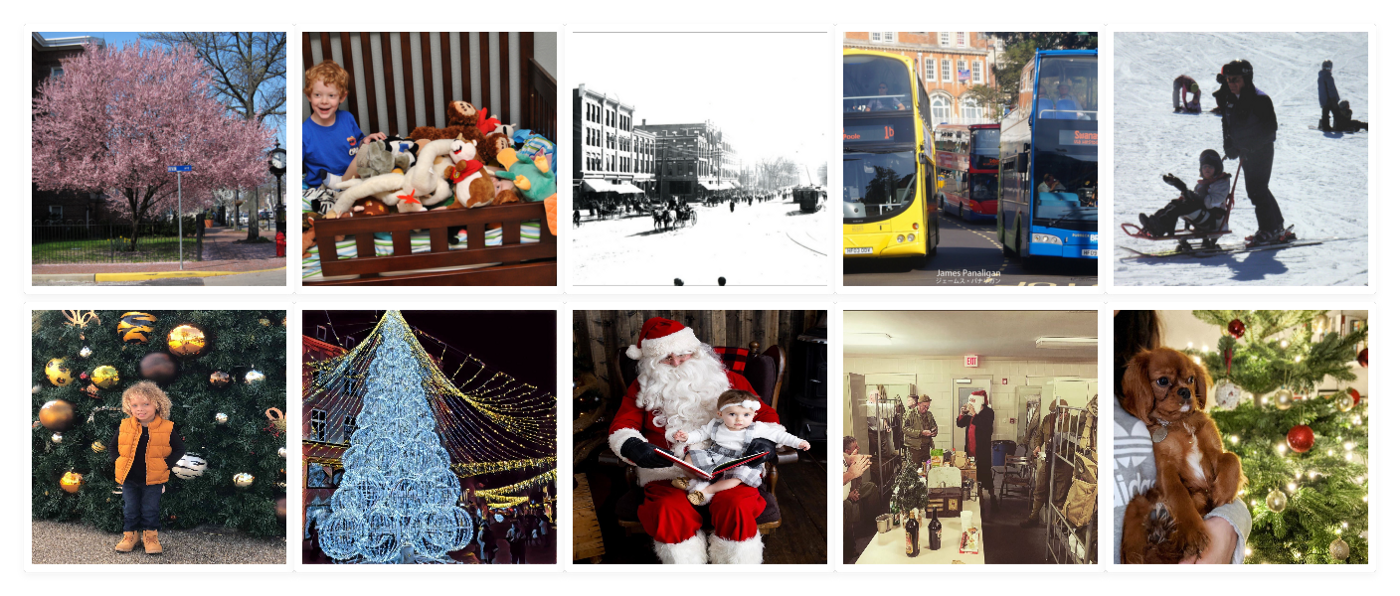

Applying this concept to our specific use case is tricky. We need a model capable of transitioning from "normal" to "Christmas." However, this mapping is much broader than in other use cases. Almost any object or aspect of life can be associated with Christmas, such as decorating objects, wearing costumes, embellishing houses and markets, or even dressing up pets as reindeer.

Figure 4: Top row: Diversity in normal images. Bottom row: Diversity in Christmas images. The images are from free-to-use websites and the COCO datasets.

Despite the complexities, we still wanted to explore the potential of existing image-to-image translation techniques. Specifically, we hoped to develop a mapping function that can transform regular images into images with a Christmas theme.

Here are our expectations and constraints:

- Image-to-image translation: The model should take an input image from the "no Christmas" domain and generate an output image in the "Christmas" domain.

- Object transfiguration: The transformation of the image should reflect the characteristics of the Christmas season. For example, people in the image should morph into individuals wearing Santa hats, beards, or red costumes.

- Identity preservation: Despite the transformation, the people who turn into Santa should still be recognizable. The generated image should retain some information from the original rather than just swapping it out for a completely different Christmassy image.

- Recognition of the original scene: If the image depicts a market, the transformation should give it a Christmas theme while still maintaining the recognition of the original market.

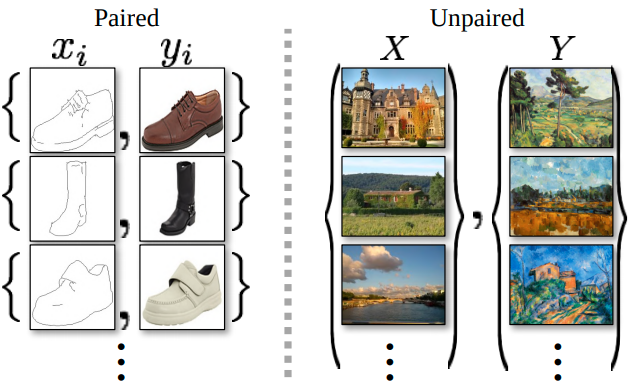

- No publicly available dataset: Currently, no existing dataset is specifically tailored for this problem. Therefore, we must collect our own dataset to train the model.

- No paired images: Gathering paired images is difficult, as it's challenging to find or capture pictures of the same subjects in both their normal and Christmas-themed states. This constraint applies to the various subjects in our dataset, including people, Christmas trees, and markets.

Figure 5: Paired vs. unpaired data. The image is taken from the CycleGAN paper.

Modeling the ChristmasGAN problem

Keeping in mind the goals and constraints of our problem, we concluded that the only viable way to model a ChristmasGAN was ‘unpaired image-to-image translation.’ Unpaired refers simply to having images in both domains (Christmas and not Christmas) without any explicit one-to-one mapping between them.

Of the many methods we tried, we discuss only two here, DiscoGAN and CycleGAN, as they seemed to work better on our dataset (we excluded the rest for brevity).

They both more or less follow the same basic idea, which is to learn a mapping between the two domains in the absence of paired images.

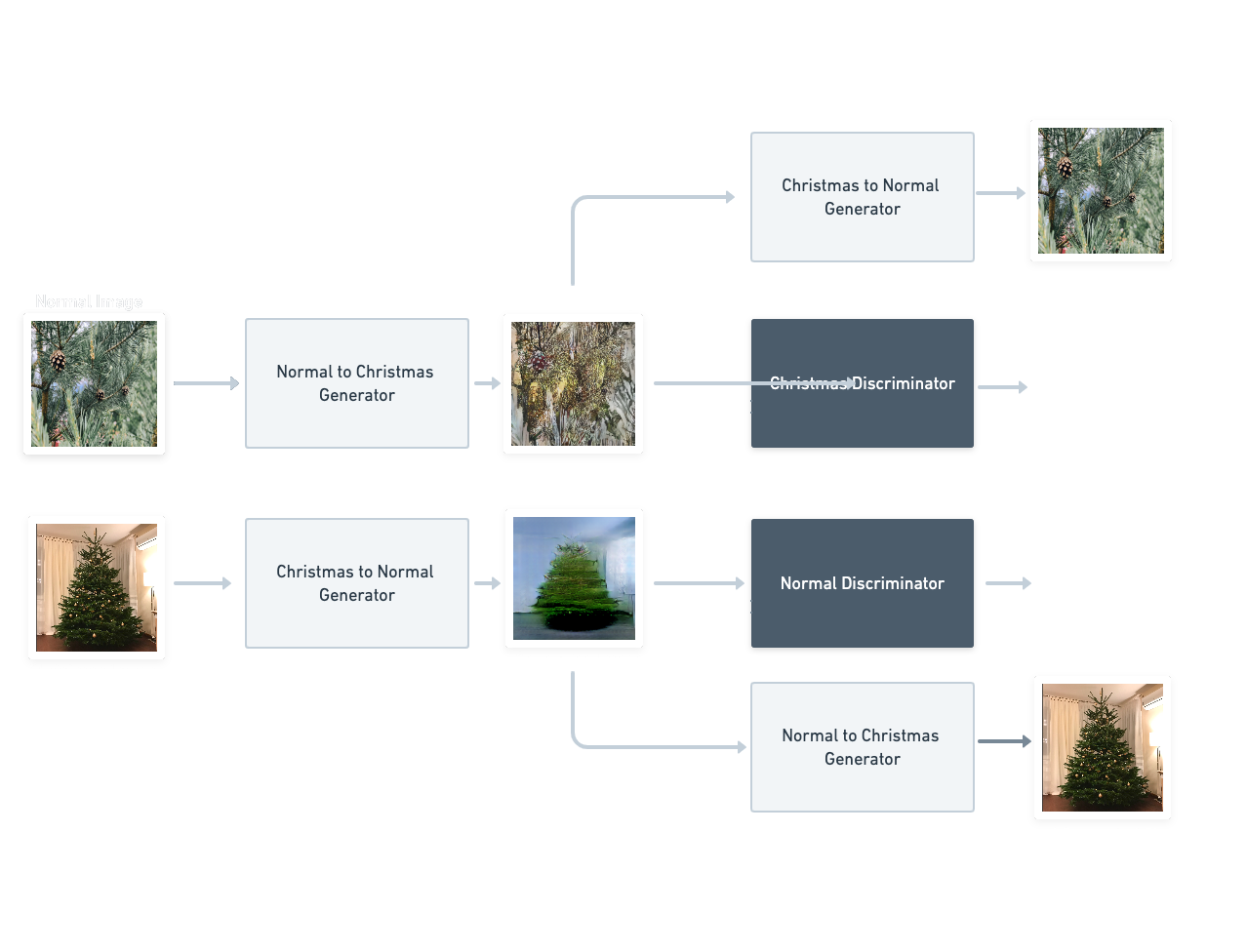

Figure 6: Redrawing of the full structure of the DiscoGAN.

Figure 7: Redrawing of half the structure of the CycleGAN. The figure shows the forward cycle of the CycleGAN; swap “normal” with “Christmas” along with the images to get the backward cycle. Alternatively, you can look at the paper for the full scheme.

In our case, we have two domains: 'A' representing normal images and 'B' representing Christmas images. The model has two generators and two discriminators, one of each per domain. The idea is to translate images from domain A to domain B with one generator and then map them back from domain B to domain A with the second generator. The model is penalized for distorting the identity of the original normal image when returning to domain A.

You can refer to Figures 6 and 7 for details.

DiscoGAN accomplishes this via a reconstruction loss, while CycleGAN accomplishes this via what they call a “cycle consistency loss.”
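The round-trip penalty can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not our training code: images are flat lists of grayscale values, the "generators" are toy functions rather than neural networks, and the distance is a mean absolute (L1) difference, which is what the CycleGAN paper uses. DiscoGAN's reconstruction loss follows the same round-trip idea but may use a different distance.

```python
def l1_distance(image_a, image_b):
    """Mean absolute difference between two equally sized flat images."""
    return sum(abs(x - y) for x, y in zip(image_a, image_b)) / len(image_a)

def cycle_loss(image, forward, backward):
    """Translate A -> B -> A and measure how far we land from the start."""
    reconstructed = backward(forward(image))
    return l1_distance(image, reconstructed)

# Toy stand-ins for the two generators (hypothetical; real ones are CNNs).
def to_christmas(image):
    """'Normal -> Christmas': brighten every pixel."""
    return [min(1.0, p + 0.2) for p in image]

def to_normal(image):
    """'Christmas -> normal': darken every pixel."""
    return [max(0.0, p - 0.2) for p in image]

def forget_input(image):
    """A bad reverse generator that ignores its input entirely."""
    return [0.5 for _ in image]

normal_image = [0.1, 0.4, 0.7]  # three grayscale "pixels" in [0, 1]
faithful = cycle_loss(normal_image, to_christmas, to_normal)
lossy = cycle_loss(normal_image, to_christmas, forget_input)
```

A generator pair that can undo its own translation gets a loss close to zero, while a pair that throws away the input is penalized. This is what pushes the model to keep the identity of the original image.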

Data collection posed the most significant challenge in this project. Unlike traditional style transfers or object transformations, our task was more complex. The images were unpaired: there were no direct correspondences and no single property that maps normal images to Christmas images. Our objective was to transfer the essence of "Christmasiness" without extensive supervision.

To gather the diverse and unconstrained data needed for our project, we enlisted the help of our teammates. We asked them to contribute a few hundred images representing Christmassy and non-Christmassy scenes while maintaining the overall theme (such as pictures of people, markets, trees, and decorations).

We wanted to introduce variety into the data, so we encouraged our team members to search for interesting and culturally diverse elements related to Christmas, ranging from "Julafton" to "Weihnachten" to "Blue Santa." This approach could have been a recipe for disaster, as it made the problem even more challenging by introducing cultural and regional connotations associated with the concept of Christmas.

The data collection process was crucial yet complex, as it required us to navigate the intricacies of capturing the diverse essence of Christmas while accounting for its cultural variations.

After completing the data collection, we dedicated time to removing poor-quality images. One technique we utilized was running a pre-trained instance segmentation network on the images. We kept the images that contained people or trees, while discarding the others.
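The filtering rule itself is simple once the segmentation model has run. Below is a hedged sketch of that rule only: the detection format (a list of dictionaries with `label` and `score`), the file names, and the confidence threshold are all hypothetical, and the model inference step is left out.

```python
KEEP_LABELS = {"person", "tree"}  # categories we wanted in the dataset
MIN_SCORE = 0.5                   # hypothetical confidence threshold

def keep_image(detections, keep_labels=KEEP_LABELS, min_score=MIN_SCORE):
    """Return True if any confident detection has a wanted label."""
    return any(
        d["label"] in keep_labels and d["score"] >= min_score
        for d in detections
    )

# Hypothetical per-image outputs of a pre-trained segmentation model.
predictions = {
    "img_001.jpg": [{"label": "person", "score": 0.93}],
    "img_002.jpg": [{"label": "pizza", "score": 0.88}],
    "img_003.jpg": [{"label": "tree", "score": 0.31}],
    "img_004.jpg": [],
}

kept = sorted(name for name, dets in predictions.items() if keep_image(dets))
```

In this toy run only `img_001.jpg` survives: the pizza has the wrong label, the tree detection is below the assumed threshold, and the last image has no detections at all.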

Then we added images of markets in both domains, which we filtered and selected manually. We augmented the non-Christmas domain by adding images from the COCO dataset to minimize data collection effort.

Web browser extensions that download all images from a webpage, and scripts that use Selenium to search for and download images automatically, came in handy. We took special care to download only free-to-use images.

We had a dataset with about 6,000 image pairs for ‘Christmas’ and ‘not Christmas’ after sorting and filtering. Now came the time to train the GAN to learn this mapping.

First try — Start small

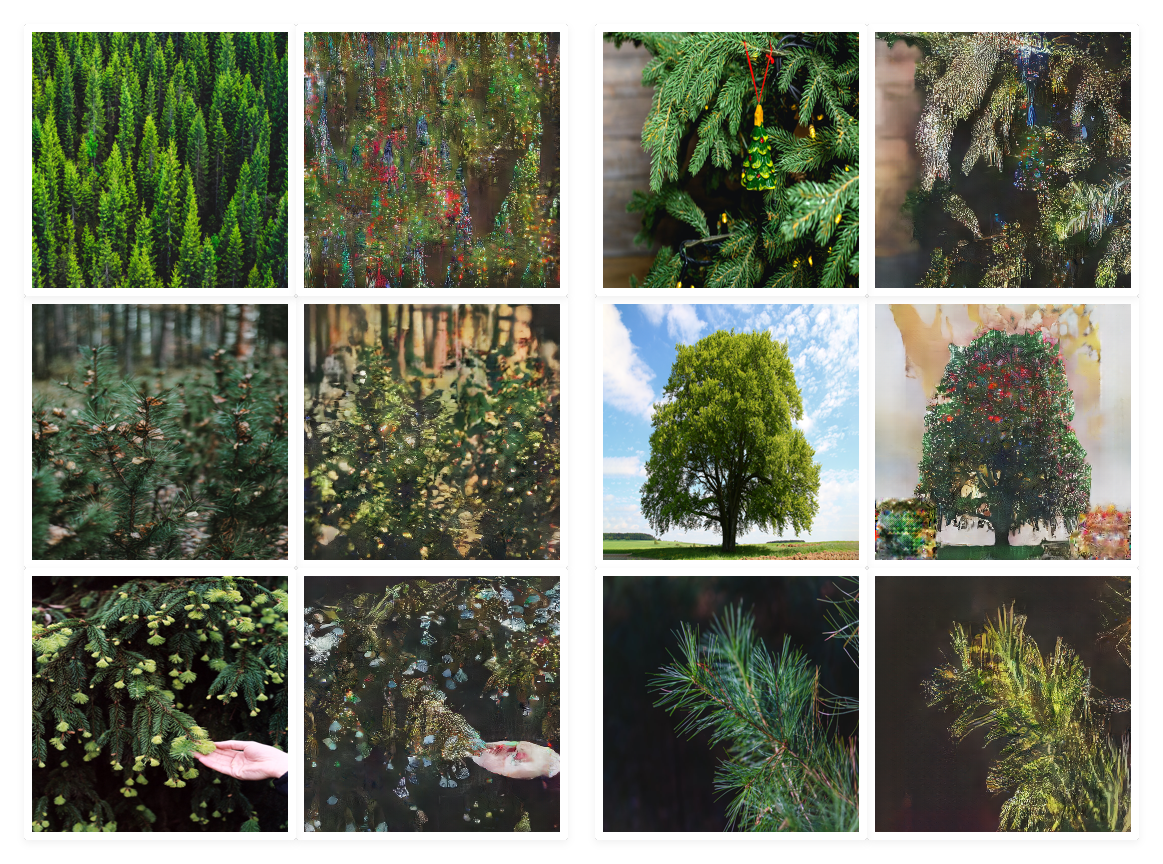

We began with a small-scale approach by training a CycleGAN model specifically on images of trees. We aimed to create a "tree to Christmas tree" generator using a GAN. Here are some results:

Figure 8: Trees to Christmas trees GAN. Six pairs of images. Left in each pair is the original image, and on the right is the Christmasfied version given by the generator. All result images will follow this order.

In each pair, the left image is the normal image given to the generator, and the right image is the ‘Christmasfied’ version it produced. The top-right pair is an exception: there, we just wanted to see how the Christmas effect looks on an already Christmassy image.

It was encouraging, especially because this ‘tree only’ subset of the entire data collection was only 190 images.

Second try — Go big

We then tried to get this Christmasification to work on more types of images (not just trees) by using the full dataset. Here, CycleGAN didn’t seem to achieve any transformation except for brightening up the shade of red.

We believe this is because the cycle loss penalizes the large changes needed to add color shifts, Santa hats, beards, and decorations. Indeed, giving the cycle consistency loss a lower weight improved the results, but not by much.
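For reference, this is the full CycleGAN objective from the paper, rewritten in this article's A/B notation. The names $G_{AB}$, $G_{BA}$, $D_A$, and $D_B$ are our labels for the two generators and two discriminators; the paper uses different symbols.

$$
\mathcal{L}(G_{AB}, G_{BA}, D_A, D_B) = \mathcal{L}_{\text{GAN}}(G_{AB}, D_B) + \mathcal{L}_{\text{GAN}}(G_{BA}, D_A) + \lambda \, \mathcal{L}_{\text{cyc}}(G_{AB}, G_{BA})
$$

$$
\mathcal{L}_{\text{cyc}}(G_{AB}, G_{BA}) = \mathbb{E}_{a}\big[\lVert G_{BA}(G_{AB}(a)) - a \rVert_1\big] + \mathbb{E}_{b}\big[\lVert G_{AB}(G_{BA}(b)) - b \rVert_1\big]
$$

The paper sets $\lambda = 10$ in its experiments. Lowering $\lambda$ relaxes the requirement that a translated image map back exactly to its original, which leaves the generators more room for large edits such as hats and decorations. We don't state the exact value we ended up with here, so treat the formula as context rather than a recipe.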

We attempted the experiment again, but this time with DiscoGAN. DiscoGAN training seemed not to converge at the start as we had problems with mode collapse.

At this point, we figured that since the concept being learned is so abstract, we might as well “weakly pair” the images.

How did we weakly pair images?

By weakly pairing the images, we mean that for any image in the ‘normal’ domain containing a person, the image sampled from the ‘Christmas’ domain also contains a person. If A has a tree, then B has a tree too. We can think of this as making the explicit assumption that there are individual mapping functions that the network can learn for one category. We limited ourselves to three sampling categories: People, trees, and markets, as they were the most dominant in our dataset.

By doing this, we assume that there are individual mapping functions for ‘person to Santa,’ for ‘tree to Christmas tree,’ and so on. This may not be strictly true for neural networks in general. But since we sometimes used instance normalization layers with single-image batches, we thought this would improve gradient flow, as the feature similarity between the sampled images is higher.

The motivation was to decouple these assumed mappings to make it easier to train the GAN. This can also be thought of as adding label information to the GAN training, which generally improves performance. This approach proved effective, as DiscoGAN began converging and produced intriguing results.
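A weak-pairing sampler along these lines can be sketched as follows. The file names and the choice to pick the category uniformly at random are assumptions made for illustration; only the three categories and the "same category in both domains" rule come from the description above.

```python
import random

CATEGORIES = ("person", "tree", "market")

def sample_weak_pair(normal_by_category, christmas_by_category, rng=random):
    """Pick a category, then one image from each domain in that category.

    The two images share a category but are otherwise unrelated,
    so the data stays unpaired in the strict sense.
    """
    usable = [
        c for c in CATEGORIES
        if normal_by_category.get(c) and christmas_by_category.get(c)
    ]
    category = rng.choice(usable)
    return (
        category,
        rng.choice(normal_by_category[category]),
        rng.choice(christmas_by_category[category]),
    )

# Hypothetical file lists, grouped by the dominant category of each image.
normal = {
    "person": ["n_p1.jpg", "n_p2.jpg"],
    "tree": ["n_t1.jpg"],
    "market": ["n_m1.jpg"],
}
christmas = {
    "person": ["c_p1.jpg"],
    "tree": ["c_t1.jpg", "c_t2.jpg"],
    "market": ["c_m1.jpg"],
}

rng = random.Random(0)  # seeded only to make the sketch repeatable
pairs = [sample_weak_pair(normal, christmas, rng) for _ in range(5)]
```

Each training step then sees, for example, a normal tree next to some Christmas tree, never a normal tree next to a Christmas market.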

Results of our ChristmasGAN project

First, let’s apply the DiscoGAN trained on all the data to just the tree images we saw before and compare how the learned mapping differs:

Figure 9: Results from Normal to Christmas generator.

As you can see, the Christmas effect has been enhanced compared to the results from above.

What's interesting is the addition of snow in the bottom left image. In all of these, the effect seems similar to style transfer, with the addition of many small Christmas lights. It’s almost glittery.

The right image in the middle row shows some artifacts. It has been proposed that they can be fixed by removing batch normalization layers from the generator’s final layers. We didn’t test this hypothesis, but this could be one area to improve the model.

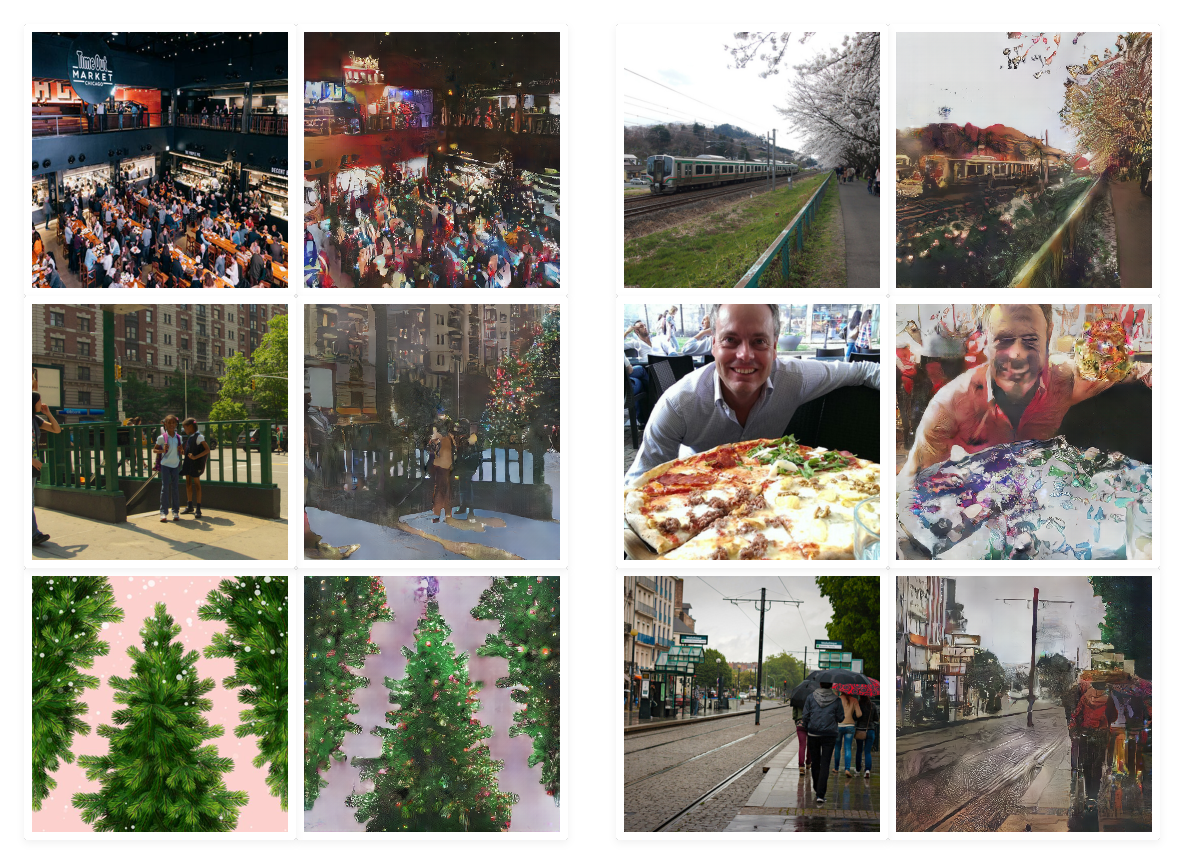

Now let’s see what happens on a random assortment of ‘normal’ images containing many different things:

Figure 10: More Christmas

The model loves to add the color red, warmer tones, and lights everywhere, even on pizzas. It’s interesting to see how Christmas is hallucinated on things typically not decorated during Christmas, like food.

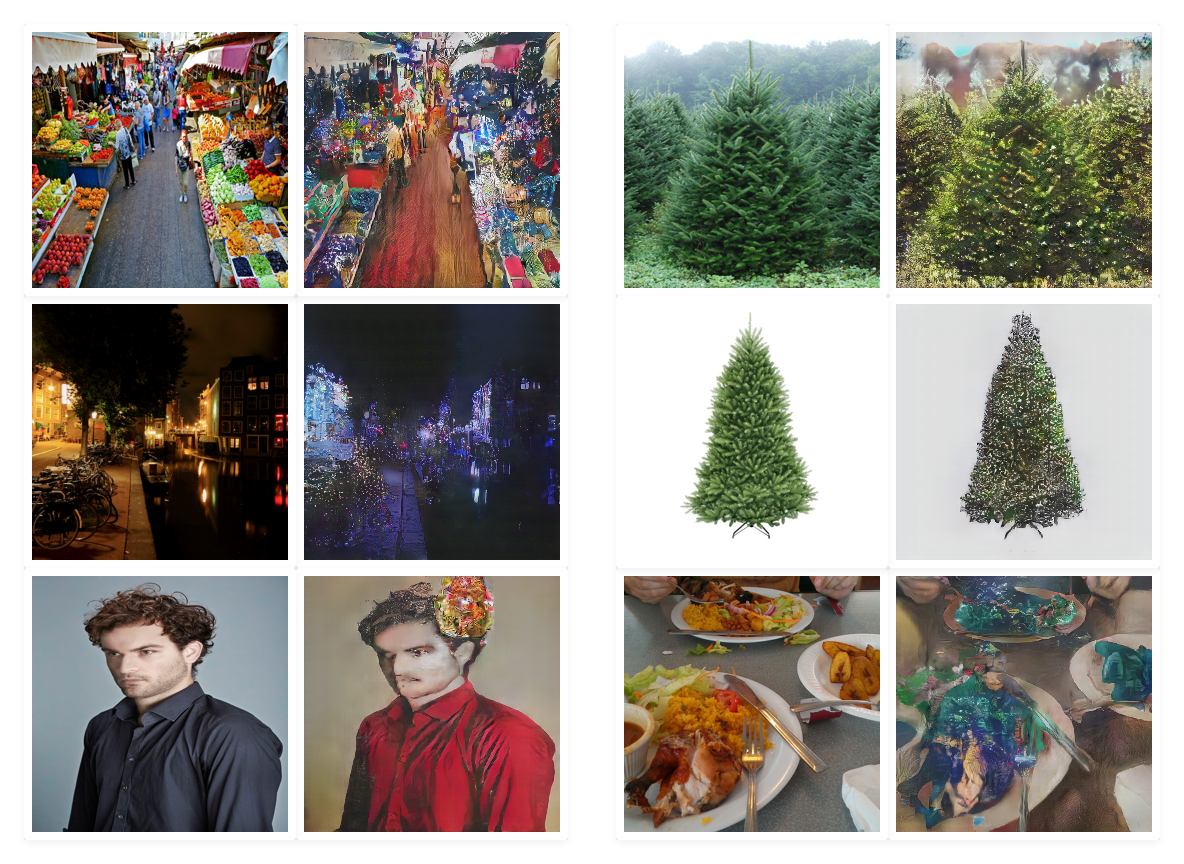

Figure 11: More Christmas!

Markets are believably Christmasified. Yep, nothing wrong here; let’s keep moving.

Figure 12: Even more Christmas

The trees are very nicely Christmasified, though the food is not. This is forgivable, as food should not have lights on it anyway. The person in the bottom left is interesting. It appears there was an attempt to hallucinate a Santa hat, but it wasn’t realistic enough. Or it could just be a nicely placed artifact.

Figure 13: Even more, more Christmas

It fares alright here, even when dealing with trains. The bottom right result is also great, as there is now snow. We wouldn’t recommend eating the pizza, though. In general, it doesn’t seem to fare too well on human faces; they are distorted to a very high degree.

But wait, there's a GrinchGAN too!

You might know what’s coming here. If you look carefully at the diagrams for DiscoGAN and CycleGAN, you’ll notice that there is also a “Christmas to normal” generator. Indeed, as a byproduct of how we model this image translation problem, we get what we would like to dub GrinchGAN.

So for those of you for whom Christmas cheer is misery, and whose sheer dislike of the holiday season and joy rivals that of the Grinch, here are some Christmassy images, with the joy removed by our GrinchGAN:

Figure 14: GrinchGAN removing joy and warmth from the world.

The mapping GrinchGAN has learned is to use colder hues and bluer colors, distort all cheer, and dial down the lights. To us, it has a nuclear winter vibe. But maybe that's the Grinch’s intention.

Conclusion and learnings from our ChristmasGAN project

This experiment was an exciting side project that gave us insights into how GANs work. With minimal effort, we were able to achieve a decent Christmas effect.

The main takeaway is that training a GAN to translate images based on the abstract concept of Christmas takes work. The loose constraints, unpaired data, and lack of supervision make it challenging.

Data quality and training formulation play a crucial role in these scenarios. A tool like Accelerated Annotation can combine automation and human expertise to provide high-quality data labeling at scale.

There are a few other things we could've tried which may have improved the results of our ChristmasGAN:

- With better tuning of the dataset, the results could have been significantly improved. Part of the difficulty is in gathering images from the two domains. Christmas images are almost always in great shape. The lighting is excellent; people are dressed and posing for them, and the camera work is usually professional.

  In contrast, everyday images are not like this. It’s hard to gather such images with a high degree of similarity, unlike horses and zebras. Just try searching for images of “coniferous trees” versus “Christmas trees” to see the stark difference in how many images you end up with. Not many people care about taking pictures of conifers before they are decorated.

- We should've tried balancing the losses on more recent image-to-image models. It takes a long time to see the GAN start to produce meaningful images. We had to wait, on average, two days before concluding one experiment. This meant we had to cut our losses early and couldn’t iterate too much on the dataset, models, and hyperparameters.

- We could've tried the weak pairing strategy on other models. But sadly, we had eliminated them too early.

GANs have significantly advanced the field of generative AI, and their ability to create realistic and diverse data has numerous practical applications. Whether you're joyful or grouchy, we hope our ChristmasGAN or GrinchGAN brings you some joy or practical insights during the warm summer months.

This blog was originally published by Hasty.ai on December 22, 2020 by Hasnain Raza. Hasty.ai became a CloudFactory company in September 2022.
