This post is the second in a five-part series that outlines five applications of AI in the drone delivery ecosystem. The first post, Drone Delivery and AI: Object Detection and Collision Avoidance, discusses how computer vision helps drones understand their surroundings so they can avoid collisions with buildings, trees, and other obstacles. Because the FAA is allowing more and more drones to operate beyond visual line of sight (BVLOS), the need for drones to detect and avoid collisions autonomously is becoming critical.

Drone connectivity equals safety, scalability, and productivity

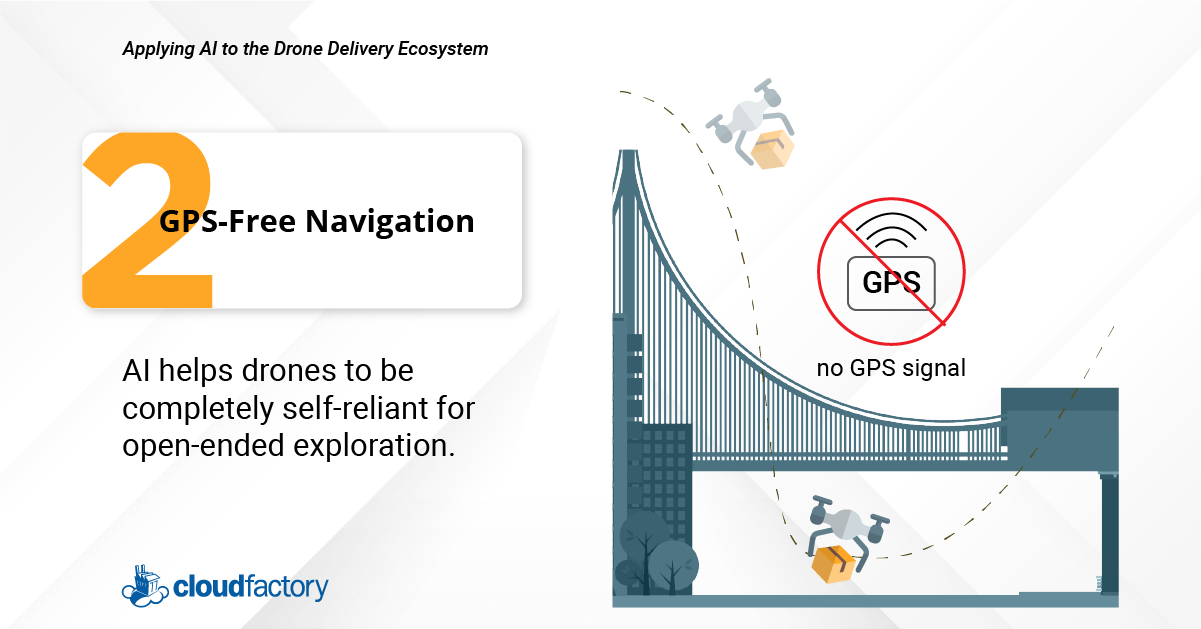

What happens if delivery drones can't connect with satellites while delivering pizza to specific addresses in densely populated cities?

If drones can't connect with satellites, they will do one of four things: return home, hover in place, land on the spot, or malfunction.

These outcomes can reduce productivity, endanger public safety, and prevent drone delivery at scale.

Before drones can go from small-scale deliveries within rural and suburban communities to large-scale deliveries within an uncrewed traffic management (UTM) system serving large metropolitan areas, they'll need to maneuver through challenging urban environments where GPS signals are unreliable.

Repeatedly navigating urban canyons—areas that restrict the view of the sky and reduce the number of satellites a drone can connect with—is a challenge many drone teams are tackling by incorporating GPS-free navigation technologies.

These technologies use computer vision, AI, and other automation techniques to keep drones airborne and safely headed to their destination.

Why is GPS-free navigation necessary to scale drone delivery?

For drone delivery at scale to succeed, drones will need to serve cities, too. Today, drone delivery over populated areas is restricted in most cities worldwide.

It's just too risky.

But with GPS-free technologies, drone deliveries could expand from the suburbs of Virginia to the streets of Washington, DC.

As public acceptance of and consumer demand for drone delivery increase, organizations are responding by investing in drone technologies that address the loss of GPS signals during flight.

What role does AI play in GPS-free navigation?

Training drones to make a sequence of decisions that achieves goals in uncertain and complex environments requires a combination of machine learning, deep neural networks, and reinforcement learning.

Computer vision and sensors enable a drone to explore complex environments, including urban obstacles, such as the areas around skyscrapers and bridges.

AI enables a drone to continue navigating a route by using visual sensors and an inertial measurement unit. After an operator sets the flight plan, a drone can find the best way to reach its destination safely.

Near Earth Autonomy provides simultaneous localization and mapping (SLAM) technology to keep drones on their flight path. Attached to the drone, the system builds a map during flight and, at the same time, estimates the drone's position on that map. In effect, it is saying, "this drone is located at this exact point on this map even though there is no GPS signal to prove it." It does this with an onboard LiDAR scanner, which senses the physical surroundings and their distances by measuring how long laser pulses sent in all directions take to bounce back to the sensor.
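To make the laser-pulse timing idea concrete, here is a minimal Python sketch of the general time-of-flight calculation. It is an illustration only, not Near Earth Autonomy's implementation; the function names and the angle convention are assumptions made for this example.

```python
import math

# Speed of light in a vacuum (m/s); close enough for pulses traveling through air.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to a surface from a pulse's round-trip time.

    The pulse travels out and back, so the one-way distance is half
    of the total path length.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def range_to_point(distance_m: float, azimuth_rad: float, elevation_rad: float):
    """Convert one range reading into an (x, y, z) point in the sensor frame.

    Assumed convention (hypothetical): azimuth is measured in the horizontal
    plane from the x-axis, elevation is measured up from that plane.
    """
    horizontal = distance_m * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        distance_m * math.sin(elevation_rad),
    )


if __name__ == "__main__":
    # A pulse that returns after one microsecond hit something about 150 m away.
    print(round(tof_distance_m(1e-6), 3))
```

Repeating this for many pulses in many directions produces the set of 3-D points (a point cloud) that a mapping system works from.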

Meanwhile, inertial sensors record the drone's movements, assisted by a camera for visual tracking.
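As a rough illustration of how recorded movements can stand in for a GPS fix, the sketch below integrates inertial readings into a position estimate (often called dead reckoning). It is a simplified 2-D example under stated assumptions: the `Pose2D` type, the `dead_reckon` function, and the sample values are hypothetical, and a real system would work in 3-D and fuse these estimates with other sensors.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float = 0.0            # meters
    y: float = 0.0            # meters
    heading_rad: float = 0.0  # direction of travel
    speed_m_s: float = 0.0    # forward speed


def dead_reckon(pose: Pose2D, forward_accel: float, yaw_rate: float, dt: float) -> Pose2D:
    """Advance the pose by one time step using simple Euler integration.

    forward_accel (m/s^2) and yaw_rate (rad/s) stand in for IMU readings.
    """
    speed = pose.speed_m_s + forward_accel * dt
    heading = pose.heading_rad + yaw_rate * dt
    return Pose2D(
        x=pose.x + speed * math.cos(heading) * dt,
        y=pose.y + speed * math.sin(heading) * dt,
        heading_rad=heading,
        speed_m_s=speed,
    )


if __name__ == "__main__":
    pose = Pose2D(speed_m_s=2.0)
    # Ten steps of 0.1 s flying straight at a constant 2 m/s.
    for _ in range(10):
        pose = dead_reckon(pose, forward_accel=0.0, yaw_rate=0.0, dt=0.1)
    print(pose)
```

Estimates built only from inertial readings drift as small sensor errors accumulate, which is one reason a camera is useful alongside them.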

NASA is using Near Earth Autonomy's technology for missions over the North and South Poles, where GPS signals can’t reach. This same technology could eventually enable autonomous urban transport and delivery capabilities.

Labeling LiDAR data

As your drone team expands its delivery operations, you’ll likely shift from relying on GPS navigation to using LiDAR data, which adds its own set of challenges.

For LiDAR data to be helpful, it must be accurately labeled, which is a big job that's hard to scale. The challenge for AI developers is to transform massive, raw sets of LiDAR data into similarly large sets of structured data to train machine learning models.

To complete this work, we recommend partnering with a managed workforce specializing in labeling LiDAR data. Such a workforce will have the technology to handle large datasets and the skills to label 3-D point cloud data. Interpreting these point clouds is a skill that takes quite a bit of time and interest to develop. Humans must meticulously correlate the points within an environment and identify the exact points on the object that needs labeling.
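To show what "structured" means here, the following Python sketch turns raw points into per-point labels using annotator-drawn boxes. It is a simplified illustration, not a description of any particular labeling tool: the `Cuboid` type, the `label_points` function, and the axis-aligned boxes are assumptions, and production annotations usually use rotated cuboids or per-point segmentation.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


@dataclass
class Cuboid:
    """An axis-aligned 3-D box drawn by an annotator around one object."""
    label: str
    min_corner: Point
    max_corner: Point

    def contains(self, point: Point) -> bool:
        # A point is inside when every coordinate lies between the two corners.
        return all(
            low <= value <= high
            for low, value, high in zip(self.min_corner, point, self.max_corner)
        )


def label_points(
    points: Sequence[Point],
    cuboids: Sequence[Cuboid],
    default: str = "unlabeled",
) -> List[str]:
    """Assign each point the label of the first cuboid that contains it."""
    return [
        next((c.label for c in cuboids if c.contains(p)), default)
        for p in points
    ]


if __name__ == "__main__":
    cloud = [(1.0, 1.0, 1.0), (5.0, 5.0, 5.0), (10.5, 0.2, 3.0)]
    boxes = [
        Cuboid("tree", (0.0, 0.0, 0.0), (2.0, 2.0, 2.0)),
        Cuboid("building", (10.0, 0.0, 0.0), (12.0, 1.0, 6.0)),
    ]
    print(label_points(cloud, boxes))
```

The pairing of each point with a class label is the kind of structured output a machine learning model can train on.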

At CloudFactory, we believe that annotating LiDAR data for drone delivery takes more than technical skill. It also takes an understanding of the drone industry and its unique data needs. If your organization works with LiDAR technology and needs help with labeling, our workforce is ready to deliver high-quality LiDAR data to your project team.

Are you racing to combine onboard AI, computer vision, and machine learning with your drones’ exploratory and transportation abilities? Check out the Essential Guide to Applying AI to the Drone Delivery Ecosystem, a comprehensive resource detailing the five applications of AI, the importance of gathering and preparing high-quality data, and annotation techniques for drone data.