Vision is a sense so natural to many of us that it's easy to take for granted how complex it is. Our eyes are not just cameras taking pictures of the world: We rely on an innate ability to interpolate missing pieces, distinguish people and objects, discern distinct items, determine distances, and detect motion.

These abilities work in tandem with our knowledge and learned experience to both interpret what we are seeing and determine appropriate reactions to an ever-changing and unpredictable environment. Similarly, for an autonomous car to understand and use the visual information it gathers effectively, its visual system must consist of more than just cameras.

The Human Vision System

Much of what we know about neuroscience comes from studying damaged brains. For example, akinetopsia, or motion blindness, is a disorder that makes it difficult for a person to perceive motion, even though they can see stationary objects just fine. The problem lies not with the primary visual cortex itself, but with other specialized portions of the brain that process visual stimuli.

Another disorder, primary visual agnosia, occurs when a person is unable to recognize or distinguish objects or faces. Again, their eyes may perform just fine – all of the input is received without issue – but there is a defect in the part of their brain that processes this information and determines which pieces of the visual input distinguish different objects.

There is also cognitive power devoted to determining appropriate actions and reactions to stimuli. If someone tosses you a ball, you make use of your vision system to see the ball, distinguish that it is a ball, analyze its motion, and move your arms and hands in a way that allows you to catch it.

Similarly, if you are crossing the street and a car is coming toward you without slowing down, you are able to react and move out of the way. This is something you would not be able to accomplish if any part of the system wasn’t working properly. Lack of vision, lack of object distinction, lack of motion detection, or lack of reaction can all contribute to a fatal error. And so it is with the vision systems in autonomous cars.

Visual Data Collection for Autonomous Cars

Computer vision for autonomous cars begins with the collection of visual data, and a lot of it. When it comes to training an autonomous vehicle to see, the more data there is to use, the better. The cars that collect data for this use case typically use a combination of remote sensing technologies, including:

- Cameras, which can record 2-D and 3-D visual images, allowing for the identification of objects. Multiple cameras make use of parallax to determine distances as well.

- Radar, which makes use of long-wavelength radio waves. A signal is emitted, and by measuring the reflected signal, motion can be detected via the Doppler effect, and distance to the object can be measured. Radar works well in low-visibility conditions because radio waves are able to penetrate fog and rain. However, radar generally provides poor-resolution images.

- LiDAR, which stands for Light Detection and Ranging, is similar to radar in that it involves emitting a signal and detecting the reflection, but it makes use of much higher-frequency laser light. This higher frequency allows for much better resolution, although it comes at the cost of functionality in conditions of low visibility.
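The distance and motion measurements described in the list above follow from a few textbook relationships. The sketch below is only an illustration, not how any particular vehicle implements them: the function names and the example numbers (focal length, camera spacing, a 77 GHz carrier) are assumptions chosen for the demonstration.

```python
C = 299_792_458.0  # speed of light in m/s


def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth from parallax between two cameras: Z = f * B / d.

    focal_px is the focal length in pixels, baseline_m the spacing between
    the two cameras, and disparity_px how far the object shifts between images.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def echo_range(round_trip_s: float) -> float:
    """Distance from the round-trip time of a radar or LiDAR pulse: R = c * t / 2."""
    return C * round_trip_s / 2.0


def doppler_radial_speed(freq_shift_hz: float, carrier_hz: float) -> float:
    """Speed toward or away from the sensor: v = c * df / (2 * f0)."""
    return C * freq_shift_hz / (2.0 * carrier_hz)


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    print(stereo_depth(focal_px=700.0, baseline_m=0.5, disparity_px=10.0))
    print(echo_range(round_trip_s=1e-6))
    print(doppler_radial_speed(freq_shift_hz=10_000.0, carrier_hz=77e9))
```

Note how a small disparity means a distant object: as the shift between the two camera images shrinks, the estimated depth grows, which is why stereo cameras become less precise at long range.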

Systems are typically located in several places on the car, with overlapping visual fields to provide a more complete understanding of the targeted area. This combination of diversity and redundancy helps eliminate errors that might be caused by a defect in any single device, including simple problems, such as a dirty camera lens.
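One simple way to picture how redundancy absorbs a single faulty device is to combine overlapping distance readings with a median. This is a minimal sketch under that assumption; production systems generally use more sophisticated fusion methods, and the function name and readings here are hypothetical.

```python
from statistics import median


def fuse_distance(readings_m):
    """Combine distance estimates (in meters) from overlapping sensors.

    A reading of None means the sensor produced nothing usable, for example
    because of a dirty lens. The median ignores a single wild outlier as long
    as most of the sensors agree.
    """
    valid = [r for r in readings_m if r is not None]
    if not valid:
        raise ValueError("no sensor produced a reading")
    return median(valid)


if __name__ == "__main__":
    # Two sensors agree, one is blocked, one is badly off.
    print(fuse_distance([24.8, 25.1, None, 60.0]))
```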

In total, an autonomous car must have a continuous stream of visual input that includes all 360 degrees surrounding it. Moreover, it can gather data imperceptible to the human eye with the use of more specialized cameras, combined with radar and LiDAR systems.
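As a rough illustration of the 360-degree requirement, the sketch below checks whether a set of sensors leaves any blind spot around the car. The sensor layout (a center heading and a field of view for each device) is a hypothetical simplification, and the check samples headings at a fixed step, so it can miss gaps narrower than that step.

```python
def angular_gap(a_deg: float, b_deg: float) -> float:
    """Smallest absolute difference between two headings, in degrees."""
    return abs((a_deg - b_deg + 180.0) % 360.0 - 180.0)


def covers_full_circle(sensors, step_deg: float = 1.0) -> bool:
    """Return True if every sampled heading is seen by at least one sensor.

    sensors is a list of (center_heading_deg, field_of_view_deg) pairs.
    """
    steps = int(360.0 / step_deg)
    for i in range(steps):
        heading = i * step_deg
        seen = any(
            angular_gap(heading, center) <= fov / 2.0 for center, fov in sensors
        )
        if not seen:
            return False
    return True


if __name__ == "__main__":
    overlapping = [(0, 100), (90, 100), (180, 100), (270, 100)]
    with_gaps = [(0, 80), (90, 80), (180, 80), (270, 80)]
    print(covers_full_circle(overlapping))
    print(covers_full_circle(with_gaps))
```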

Data Cleaning and Annotation

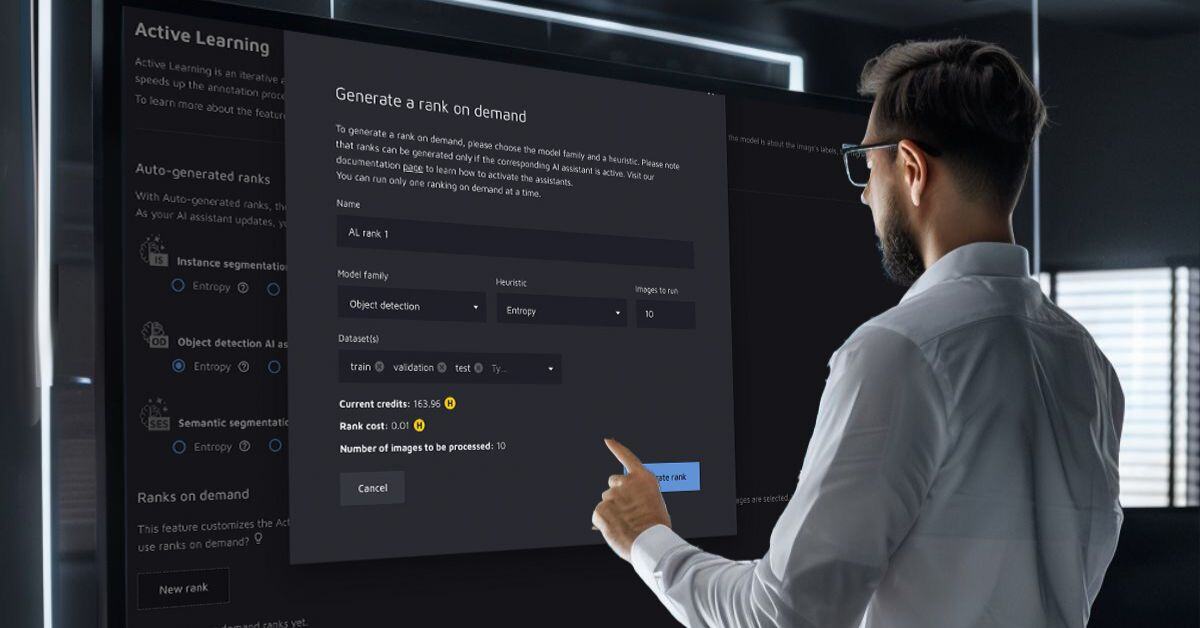

To teach an autonomous vehicle to see, people must process the data collected from these sources and structure it so that it can be used to train an autonomous system to interpret the visual world reflected in the data.

Annotating images to train and maintain the computer vision system is the most time-consuming part of this challenge. The annotations call out the objects in the images that the computer vision system must understand and react to when, ultimately, the system must take some action in that environment. Labeling the data prepares it for use in machine learning.
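To make the idea of an annotation concrete, here is a minimal sketch of what a labeled image record might look like when objects are marked with bounding boxes. The schema, the class name `BoxAnnotation`, the labels, and the file name are all hypothetical; real annotation tools each define their own formats.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class BoxAnnotation:
    """One labeled object: a class name plus a pixel bounding box."""

    label: str
    x_min: int
    y_min: int
    x_max: int
    y_max: int

    def __post_init__(self):
        # Reject boxes that are inverted or have no area.
        if self.x_max <= self.x_min or self.y_max <= self.y_min:
            raise ValueError("box must have positive width and height")

    def area(self) -> int:
        return (self.x_max - self.x_min) * (self.y_max - self.y_min)


def annotate_image(image_name, boxes):
    """Bundle all boxes for one image into a plain dictionary."""
    return {"image": image_name, "objects": [asdict(b) for b in boxes]}


if __name__ == "__main__":
    record = annotate_image(
        "frame_000123.png",  # hypothetical file name
        [
            BoxAnnotation("pedestrian", 410, 220, 470, 380),
            BoxAnnotation("stop_sign", 900, 150, 960, 210),
        ],
    )
    print(json.dumps(record, indent=2))
```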

Feeding this data into a training algorithm makes it possible to teach the autonomous car’s vision system to make these distinctions on its own. Basically, after being fed labeled images over and over again, the system can recognize patterns associated with the labels and determine the labels of future objects on its own.
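The learn-from-labels loop can be illustrated with a deliberately tiny classifier. This sketch uses a nearest-centroid rule on made-up two-number features (an object's width and height in meters) rather than the deep learning models used on real images; the feature choice, the labels, and the function names are assumptions for illustration only.

```python
from collections import defaultdict
from math import dist


def train(examples):
    """Average the feature vectors seen for each label.

    examples is a list of (features, label) pairs, where features is a
    tuple of numbers. Returns a dict mapping each label to its centroid.
    """
    grouped = defaultdict(list)
    for features, label in examples:
        grouped[label].append(features)
    return {
        label: tuple(sum(column) / len(column) for column in zip(*rows))
        for label, rows in grouped.items()
    }


def predict(centroids, features):
    """Label a new object with the class whose centroid is closest."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


if __name__ == "__main__":
    # Hypothetical labeled data: (width_m, height_m) -> label.
    labeled = [
        ((0.5, 1.7), "pedestrian"),
        ((0.6, 1.8), "pedestrian"),
        ((2.5, 3.5), "truck"),
        ((2.6, 3.8), "truck"),
    ]
    model = train(labeled)
    print(predict(model, (0.55, 1.6)))
    print(predict(model, (2.4, 3.4)))
```

The more labeled examples each class has, the better its centroid represents that class, which mirrors why larger annotated datasets tend to produce more reliable vision systems.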

To learn more about data annotation for this use case, read about how Luminar Technologies uses sensor data to increase the sight range for autonomous vehicles.

Ideally, the car is now at the point where it can determine if it is seeing a pedestrian, a truck, a sign, or an object in the road. Based on its training and adjustments made during the full AI lifecycle, the computer vision system has the information it needs to make decisions and react based on the visual data it will consume in real time.

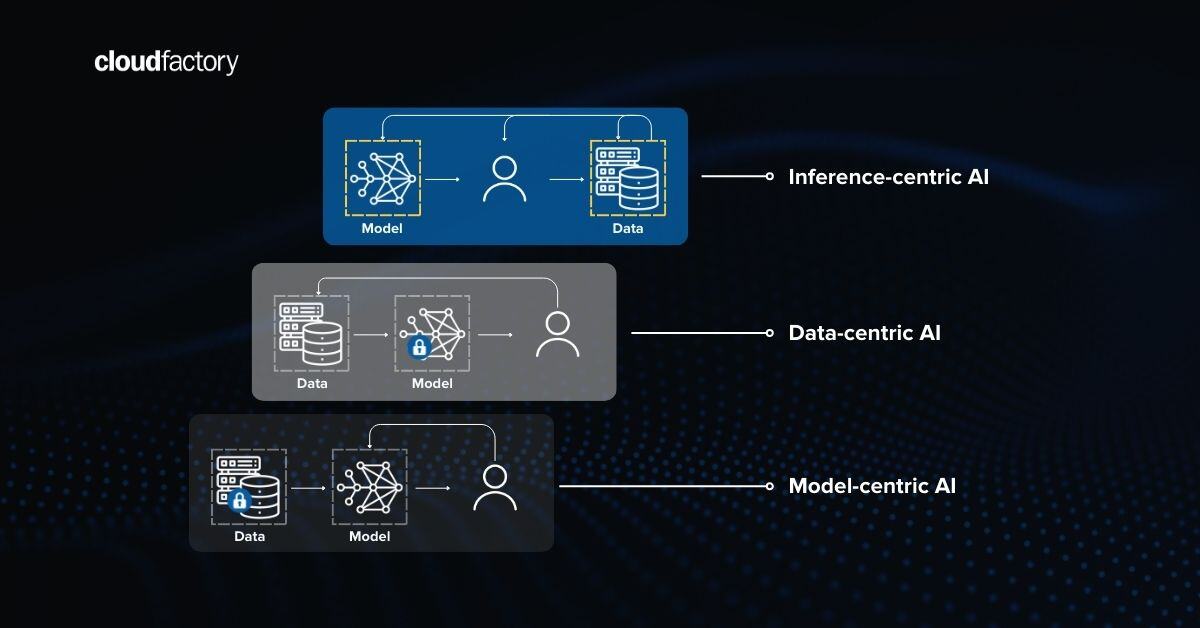

Machine Learning for Computer Vision

If we lived in a simulated world, careful programming alone might be enough to prepare an autonomous vehicle to hit the road. However, cars operate in an ever-changing environment, and no two drives from point A to point B will ever be identical. Weather conditions change, unexpected obstacles appear, and traffic varies.

Enter machine learning and deep learning algorithms. Just as these algorithms can take the annotated visual data and use it to interpret and understand the environment, they can be used to learn other patterns, allowing for the development of obstacle avoidance, predictive modeling, and path planning.
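To give a sense of what path planning around obstacles means, the sketch below finds a route across a small grid using breadth-first search. This is a classical, non-learned technique swapped in purely for illustration; it is not how a learned planner works, and the grid representation and function name are assumptions.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search over a grid where 1 is an obstacle and 0 is free.

    start and goal are (row, col) tuples. Returns the list of cells from
    start to goal, or None if the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk backwards from the goal to rebuild the route.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


if __name__ == "__main__":
    road = [
        [0, 0, 0],
        [0, 1, 0],  # the 1 is an obstacle in the middle of the road
        [0, 0, 0],
    ]
    print(shortest_path(road, (1, 0), (1, 2)))
```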

Challenges remain, however, in subtler interactions. While a human driver can make eye contact with a pedestrian and engage in communication using body language, an autonomous system does not yet have this ability.

At the current rate of technological advancement, fully autonomous vehicles are here, and their proliferation is not far off. Critical to this development are the teams involved in the data collection, data preparation, and development of the machine learning algorithms that train these systems to see.

Trained Teams for Image Annotation for Computer Vision

At CloudFactory, we provide professionally managed, trained teams that clean and label data with high accuracy using virtually any tool. We’ve worked on hundreds of computer vision projects, and our teams work with 10 of the world’s top autonomous vehicle companies.

We work with organizations across industries and process millions of tasks a day for innovators using computer vision to build everything from self-driving cars and cashierless checkout to precision farming and cancer detection algorithms. We’re also on a mission to create meaningful work for talented people in developing nations.

To learn about how CloudFactory can scale high-quality labeled data for computer vision, contact us.
