In our first article in this series, we discussed three common mistakes modelers make when they are designing and deploying automation and machine learning (ML) models. The quest for the perfect model on the first try often results in delays and frustration.

CloudFactory’s LinkedIn Live guest James Taylor calls these overly complex attempts “Big Bang” projects and they are to be avoided. Instead, Taylor urges companies to use an incremental approach to designing models:

“The best approach is to start with a specific business problem, decide what a “better” business outcome would look like, and then work backwards to determine what technologies and approaches are needed in order to get there.”

So, how should you go about it? The trick is to figure out which technology or approach is best to handle each discrete micro decision — these are the small decisions that organizations make every day about some aspect of their day-to-day workflow.

For example, should an insurance claim be investigated, should a loan be awarded, should a customer receive a discount, should the equipment receive unscheduled maintenance?

Sometimes machine learning or automation is the best option but other times it is best to utilize humans in the loop (HITL). Often, the best approach is to have a combination of the two, with a human-and-machine system that involves multiple approaches. These need not be all-or-nothing decisions.

5 Ways to Handle Each Micro Decision

High-performing automation and ML combine people and technology strategically to achieve the best outcomes. It is neither necessary, nor effective, to treat the entire problem as an ML problem. In a LinkedIn Live session CloudFactory hosted last week, James Taylor described how he discusses this with his clients who are implementing a decision management solution.

“We play this game, we call it the ‘if only game’. This is how you decide today. Fill in the blanks in the following sentence: If only I knew blank, I would decide differently.”

The overall process remains one largely driven by the combination of business rules and humans, but with the “if only” predictions provided by the ML model added to the mix. The value comes from combining them effectively.

When you are designing and deploying automation, you have five options for processing each micro-decision associated with a task. Each option has pros and cons, so it’s important to consider how your choice will affect your outcomes:

- Let the model and the automation process the case without human intervention or expertise.

- Route it to an existing manual system in-house.

- Direct it to an external workforce.

- Alert the end user, who might be a customer, to address it.

- Establish a default, and avoid additional action.

So how do you choose? Generally, when the model is certain, with a high confidence score, let the model make the decision. When the model is unsure, your best choice depends on whether the decision comes with high or low stakes. For low-stake decisions, such as coupon redemption, a default outcome is likely sufficient. When the stakes are high, as with the awarding of a mortgage, and the model is unsure, involving a human in the loop is generally the most cost-effective.

In our LinkedIn Live session on business rules and machine learning, Taylor explained how a human in the loop brings maximum value. Some of his clients have suggested that they want to insert a human at the very end of the process to make the final decision, to essentially override what the entire system has recommended. He cautions against this approach.

Letting [the subject matter expert] override it means that there's no guarantee that he's taken account of all the things that the model would take account of. So it was much more effective to have his judgment be an input to [the model].

This insight is critical for two reasons:

- The human in the loop should be there to fix, modify, provide, or clarify the inputs to the model — not to override the model.

- These kinds of tasks require knowledge, training, and care but not the level of expertise that would only very rarely require a surgeon, an engineer, or an in-house subject matter expert.

Applying People Strategically for the “Squishy Middle” and Data Enrichment

Modelers often obsess over model accuracy and feel they must achieve a particular level of accuracy before they can begin to consider how to put the model to use in their organization. This causes deployment to be delayed and is unproductive. Often, incrementally better is sufficient to bring value to the organization.

Tom Khabaza puts it this way in his eighth law of data mining, the Value Law:

The value of data mining results is not determined by the accuracy or stability of predictive models...The value of a predictive model is not determined by any technical measure. Data miners should not focus on predictive accuracy, model stability, or any other technical metric for predictive models at the expense of business insight and business fit.

There are always four possible outcomes when you build a predictive model, and they directly impact your ROI, as you can measure the financial impact of each. Overall accuracy isn’t good enough. You should analyze each of these four separately and come up with an overall strategy that combines the model with human processes.

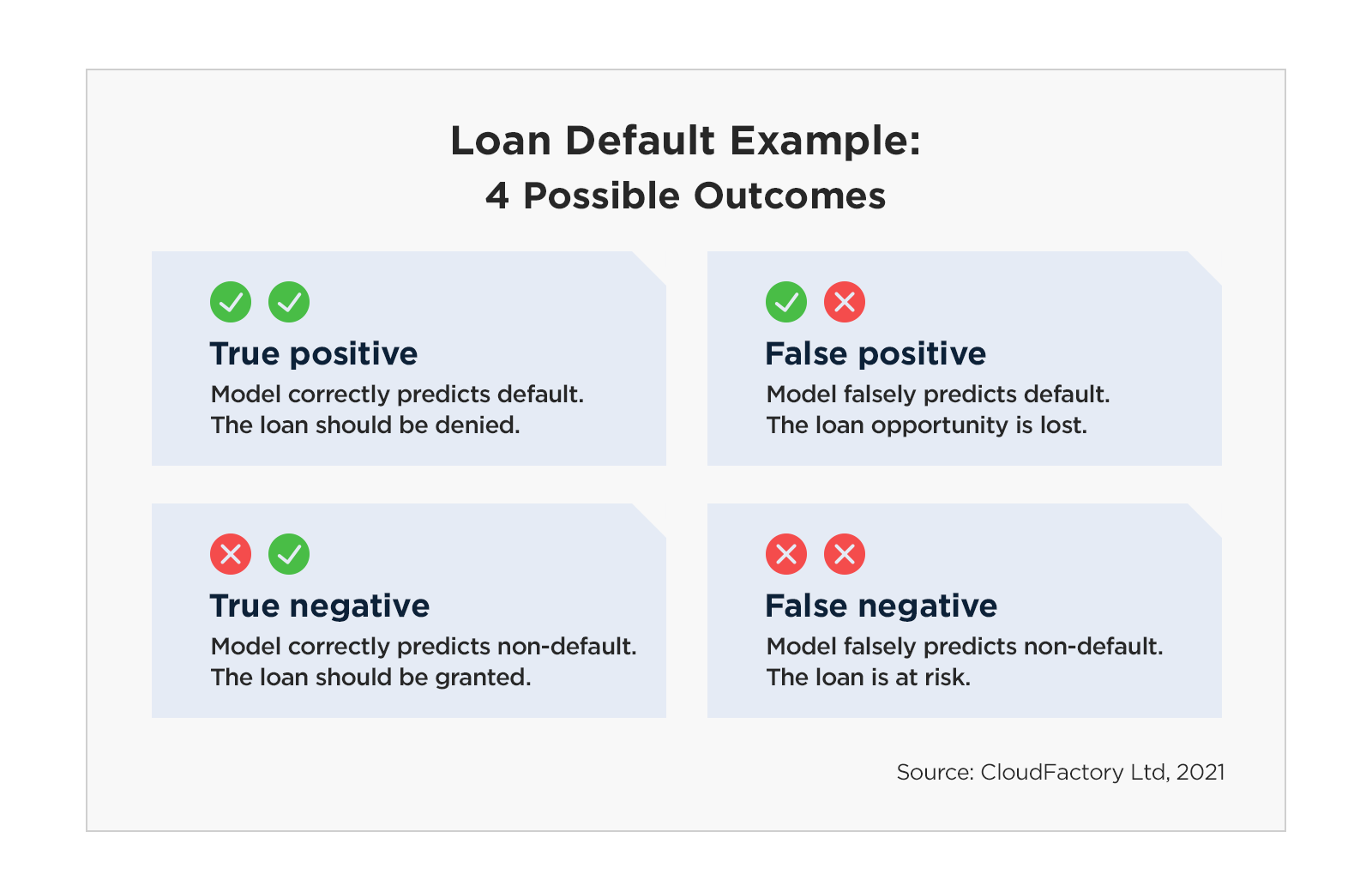

For instance, you might be predicting loan default where there are four possible outcomes: In this example, building a model to predict loan default could result in four possible outcomes.

In this example, building a model to predict loan default could result in four possible outcomes.

Each has its own monetary implications. In this example, false negative arguably has the most serious financial implications, so the human-machine partnership should be designed with that in mind. Clear scores, with either very high or very low values, might get fast tracked with little or no human interaction, but unclear scores (or what CloudFactory’s LinkedIn Live guest Dean Abbott called the “squishy middle,” 26:38 in video) might get routed to a person, like a loan processor.

Alternatively, there might be information that is either missing or requires confirmation that would change a middling score into a clear score. That is where an external workforce can be helpful for data moderation or enrichment. For example, a person might need to confirm that a small business that submitted a loan application actually exists. Someone without banking subject matter expertise can perform such an investigation, provide the confirmation, and the model can rerun the score.

Each of these four possible outcomes will have a different risk-reward profile. An external workforce is most helpful when the risk of misclarification by machine alone is high, and the potential value to the organization is high. You can massively increase your organization’s bottom line when you treat an external workforce as an additional tool in your toolkit. Your best bet is having a managed team on standby that you can use when the risk-reward profile indicates an external workforce will bring ROI.

This is the second article in a three-part series.

3 Examples: Solving Automation and ML Exceptions with Humans in the Loop

In our next series article, you’ll learn how CloudFactory worked with three companies, each of which had a problem that involved data, automation, and/or machine learning. Our managed workforce was able to solve each problem because we worked with them either to develop the necessary training data or to support key micro-decision points:

- A sports analytics platform company needed help when their computer vision model had low confidence when tracking players in game footage.

- A business intelligence company had to solve for edge cases that their machine learning algorithm was not able to handle.

- A retail rebate company had to overcome OCR error rates for transcription in real time.

To learn more about decision making for better ROI on automation and machine learning, check out our LinkedIn Live session on business rules and machine learning with James Taylor, a leading expert in applying and optimizing decision modeling, business rules, and analytic technology.

Data Science Workforce Strategy ML Models AI & Machine Learning Exception Processing