In April, I attended Open Data Science Conference (ODSC) East to engage with data science practitioners, machine learning vendors, and analytics leaders about human in the loop. This year marked the first in-person version of the event since the pandemic, yet the conference was well-attended by an enthusiastic group of data professionals. Some also attended from home, leading to a record hybrid audience of 6,800 attendees.

_East.jpg?width=700&name=Takeaways_from_Open_Data_Science_Conference_(ODSC)_East.jpg)

Day 1 kicked off with virtual keynotes and in-person, hands-on workshops. Days 2 and 3 saw larger crowds and an active expo area. The opening keynote by UC Irvine computer scientist Padhraic Smyth gave me the first of three of my biggest, most surprising takeaways from the conference.

Perhaps you’ll be surprised, too.

Takeaway 1: Without humans, models are less accurate and often overconfident

Smyth opened by discussing model prediction confidence. Virtually all machine learning models, from the latest deep learning networks to the most traditional algorithms, provide a confidence score along with their predictions. Smyth explained that it’s not uncommon for deep learning models to have a 99% confidence score when correct—and a 99% confidence score when incorrect.

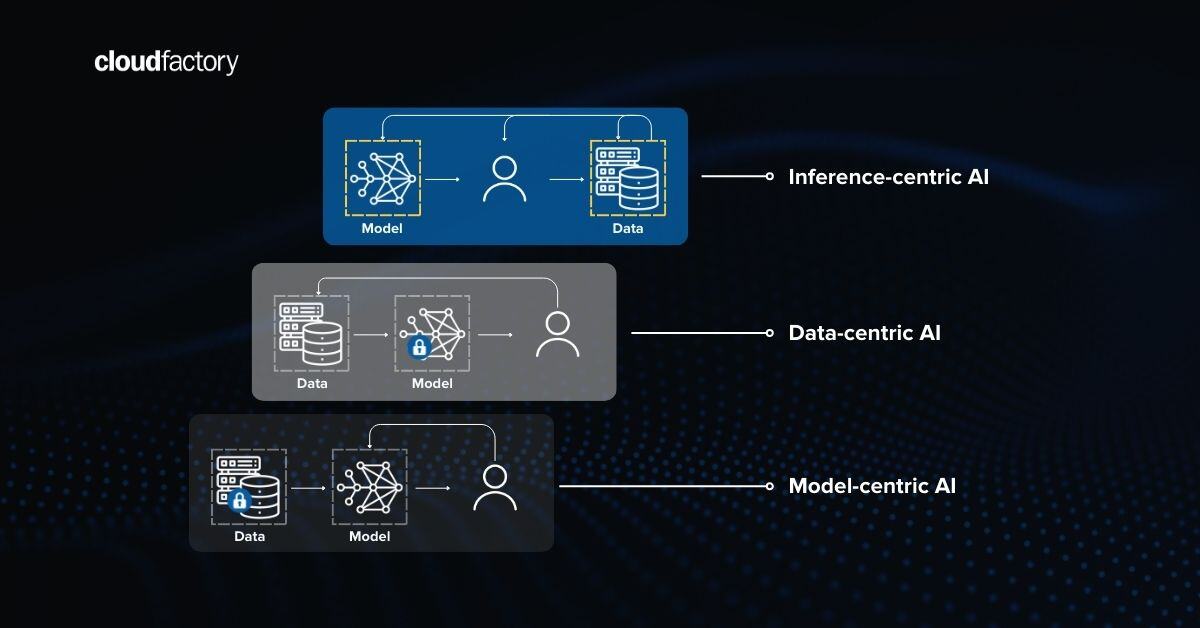

This occurrence is unfortunate because you could use a middling level of confidence to trigger human review, something hard to do with an inflated confidence score. He concluded by comparing three scenarios: Two models in an ensemble, two humans, and one of each. According to Smyth's research, the most accurate was a model paired with a human.

Takeaway 2: Human-in-the-loop project management experience is rare

Before my talk, What Analytics Leaders Should Know About Human in the Loop, I gathered survey data, which revealed that only specialists in emerging areas had direct experience with human in the loop. About half of data scientists weren't familiar with it.

When I spoke with human-in-the-loop author Robert Monarch during a CloudFactory interview, Robert speculated that data scientists in academia often train with famous datasets but arrive in industry lacking hands-on experience managing human-in-the-loop projects.

Although analytics leaders, not practitioners, attended my ODSC talk, that audience seemed to follow the pattern: Only 9% raised their hands when asked if they came into the conference with some knowledge of human in the loop.

Takeaway 3: The data science community is increasingly focused on data during the entire ML lifecycle

Most practicing machine learning modelers would think it evident that you have to focus on the whole machine learning lifecycle. But that hasn't always been true. There's been an obsession with the modeling phase for years. When data annotation and labeling are involved, the obsession focuses only on the training data (as necessary as that is) and assumes that you don’t need human oversight after modeling.

Of course, that’s not true.

Models need monitoring, and they eventually degrade, which is why human-in-the-loop vendors provide ways to track, version, and refresh models.

This thought brings me to my third ODSC takeaway: Data must be monitored as well.

With so many person-hours invested in labeling and annotating data for machine learning models, the training data is as valuable an asset as the model.

The company Pachyderm provides software that applies versioning to data. Another vendor, Metaplane, supports data observability by tracking various indicators, including summary statistics, to monitor changes and indicate when something might be wrong.

What’s fascinating is that being alerted to a problem is not enough; you also need human review and human correction as the next steps. Although technology can provide real-time transparency into data issues, how many early adopters will have a scalable intervention strategy in place and ready to go when monitoring reveals problems?

Many companies have their data scientists and data engineers manually diagnose and correct problems, but that’s not sustainable. Inevitably, these companies will need to address data interventions with external, human-in-the-loop resources.

Three takeaways = three reasons to explore partnering with a managed human-in-the-loop workforce

For more than a decade, CloudFactory has been helping innovative companies do more with data by providing a scalable, managed, human-in-the-loop workforce with expertise in computer vision, data annotation, and data processing.

Were you surprised by Padhraic Smyth's observation that solutions combining humans and models are most accurate? How about my survey results revealing that human-in-the-loop expertise rarely lives in-house or by the idea that monitoring data is as vital as monitoring models? Either way, consider it a prompt to better understand how CloudFactory can help you accelerate your artificial intelligence initiatives and optimize your business operations.