Big Data is all the rage these days.

All of us (businesses, government entities, and individuals in every sphere of our lives) are generating “data exhaust” that is increasingly used to weigh, measure and categorize us. Think about our minute-by-minute interactions with mobile phones and “all things Internet”, organizations moving their infrastructure to the cloud, and the rise of multimedia and social media, all fueling exponential growth in the amount of data being captured today. The result is Big Data!

It is estimated that by 2014 the amount of data a company handles will double every 1.2 years, while its scale and complexity continue to increase daily. “If US health care alone were to use big data creatively and effectively to drive efficiency and quality, the sector could create more than $300 billion in value every year,” says a research report by the McKinsey Global Institute.

While Big Data is quickly becoming the new competitive advantage, bad data or poor data quality costs US businesses $600 billion annually.

A few weeks ago I ran into an excellent New York Times piece by Steve Lohr about Big Data sizing up and broadening beyond the Internet. In a nutshell, the piece outlines the need to shift focus from advances in computer processing and storage to the fundamentally important question: how do we make better decisions out of all that data?

Data-driven insights, experts say, will fuel a shift in the center of gravity in decision-making. Decisions of all kinds, they say, will increasingly be made on the basis of data and analysis rather than experience and intuition — more science and less gut feel.

Even in the earliest days of Big Data, when it was synonymous only with pioneering Internet companies like Google, Amazon and Facebook, I remember lively discussions among data mining experts about the need to engage people and human judgment to generate valuable insights from Big Data.

As technology continues to undermine the use of traditional labor, the role of human insight in better decision-making with Big Data is being replaced by Artificial Intelligence (AI).

Fast forward to the present day: we have more than a dozen “Big Data” companies offering better ways to help enterprises collect this data, process it, analyze it and visualize it.

Splunk helps enterprises monitor, analyze and visualize machine data. Think of everything from customer clickstreams and transactions to network activity and call records.

Quid funnels information from patent applications, research papers, news articles, funding, and others, to create interactive visual maps of current happenings in technology sectors.

The DataSift platform allows organizations to improve their understanding and use of social media by filtering deductive social data.

Cloudera is the market leader in Apache Hadoop software, the most powerful technology today for storing and processing Big Data, both structured and unstructured.

Palantir, founded in 2004 by PayPal alumni and Stanford computer scientists, offers software applications for integrating, visualizing and analyzing the world's information.

StrikeIron is the first to deliver data-driven API solutions in the cloud and offers a broad range of products that includes email verification, address verification, reverse phone and address append.

GoodData offers SaaS business intelligence and custom reporting to help companies monetize their Big Data.

The new power accessible via these “Big Data” companies (greater speed and better throughput etc.) is tantalizing -- but the question is, who is solving the “bad data” problem?

Certainly, tools taken from the steadily evolving world of AI, such as machine learning, can automatically discover and surface poor data quality. But for businesses where data is absolutely mission critical, and as the patterns that drive business decisions are increasingly being discovered by machines, how can we be assured that these non-human judges are emitting valuable insights and not noisy mediocrity?

Either way, if we want to make better decisions out of Big Data, this signal-to-noise problem badly needs to be solved.

What is left to do in Big Data processing?

Sixty years ago, around the same time that artificial intelligence was born, J. C. R. Licklider, a computer and Internet pioneer, proposed an alternative to AI: Man-Computer Symbiosis, better known as Intelligence Augmentation (IA). Licklider envisioned that human brains and computing machines would be tightly coupled, and that the resulting partnership would think as no human brain has ever thought and process data in a way not approached by the information-handling machines we know today.

Licklider's vision of “man-computer symbiosis” showed many of the pure AI systems envisioned at the time by over-optimistic computer scientists to be unnecessary, and it marked the genesis of ideas about computer networks that later blossomed into the Internet.

[Side note: Shyam Sankar, a data mining innovator, also reiterates this early vision of Licklider's in his 2012 TED talk, The Rise of Human-Computer Cooperation.]

The other essential technology required in Big Data processing [beyond computer processing and storage] is “clever software” that makes better decisions out of all the data, so this insight (humans and machines working together as partners, not adversaries) feels more valid than ever. The only part of Big Data processing that AI will never replace is the intuitive ability of the human mind.

To cut to the point, there will always be a "last mile" problem and opportunity. Big Data processing is not about humans versus machines or about finding the right algorithm; it is about the right symbiotic relationship between humans and machines.

------------------------------------------------------------

Lately I’ve found myself talking with a lot of data-intensive companies grappling with the implications of Big Data’s “last mile” problem. Case in point: I recently spoke to a senior executive of a company that specializes in building animatics. They collect and analyze over a million images a day to build animatic storyboards. Their software scrapes the web for images and an algorithm generates a short description for each image. Their main challenge was that they knew the quality of results they wanted from the data (≥ 98% accurate), but that was computationally difficult to achieve. Their algorithms were highly accurate at tagging images when repeatable patterns were found, but the vast majority of the time no such patterns could be ascertained, so the algorithms tagged the images inaccurately. To give you an idea, one image of a football was mis-tagged as “Cinderella went to the ball”.
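One common way to approach a case like this is to have the tagger report how confident it is and to send only the low-confidence tags to a person. The Python sketch below illustrates that idea under stated assumptions: the `Tag` type, the `route` function, the confidence scores and the 0.98 cutoff are all hypothetical names and values chosen for illustration (a confidence cutoff is not the same thing as the 98% accuracy target), and none of this is the animatics company's or CloudFactory's actual code.

```python
from dataclasses import dataclass


@dataclass
class Tag:
    image_id: str
    description: str
    confidence: float  # tagger's own score in [0, 1]; assumed to be available


def route(tags, threshold=0.98):
    """Split machine tags into auto-accepted ones and ones needing human review."""
    accepted, needs_review = [], []
    for tag in tags:
        if tag.confidence >= threshold:
            accepted.append(tag)      # machine is confident: keep its tag
        else:
            needs_review.append(tag)  # uncertain: hand off to a human worker
    return accepted, needs_review


# Made-up example data for illustration only.
tags = [
    Tag("img-001", "a football on grass", 0.99),
    Tag("img-002", "Cinderella went to the ball", 0.41),
]
accepted, needs_review = route(tags)
```

In this sketch the machine keeps the bulk of the easy work, and human attention is spent only where the algorithm admits it is unsure.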

How does CloudFactory handle the “last mile” problem of Big Data?

At CloudFactory, our ability to manage the tradeoff between accuracy and scale that is inherent in Big Data processing relies on the right mix of human and machine intelligence.

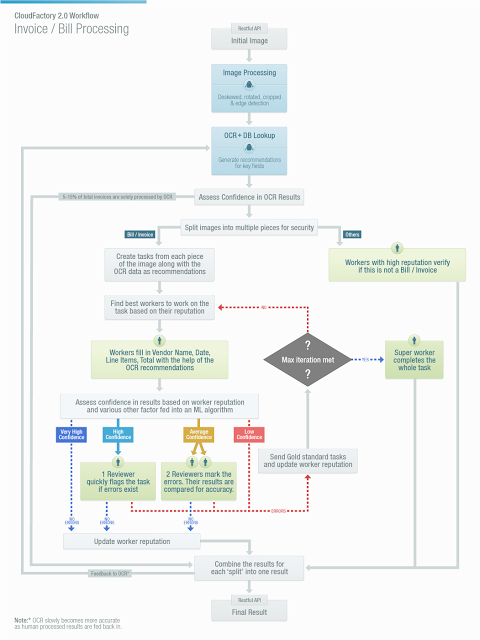

Our machine learning algorithms manage workflows, monitor the reputation of workers and perform quality assurance on each task, while our on-demand and highly scalable workforce can be used to audit or complete specific tasks, verify those done by algorithms or handle the tasks that are difficult for machines alone to accomplish. This combination of effort is the key. Here’s a quick snapshot of a sample CloudFactory 2.0 workflow:

Because Big Data can be so diverse, our platform uses a combination of super cool cognitive science tricks, game theory, data science and human-computer interaction best practices. These give us a greater ability to solve the unique, large-scale challenges associated with Big Data.
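To make the idea of combining worker reputation with quality assurance a little more concrete, here is a minimal Python sketch of a reputation-weighted vote over the answers several workers give to the same task. The function name `weighted_vote`, the reputation scores, the neutral default weight and the 0.6 agreement cutoff are all assumptions made up for this example; it is not a description of how the CloudFactory platform is implemented.

```python
from collections import defaultdict


def weighted_vote(answers, reputation, min_share=0.6):
    """Pick the answer backed by the most reputation weight.

    answers:    dict of worker_id -> answer (assumes at least one answer)
    reputation: dict of worker_id -> weight in (0, 1]
    Returns (answer, share). The answer is None when the winning share of
    total weight is below min_share, meaning the task needs another look.
    """
    totals = defaultdict(float)
    for worker, answer in answers.items():
        # Workers with no recorded reputation get a neutral weight of 0.5.
        totals[answer] += reputation.get(worker, 0.5)
    best = max(totals, key=totals.get)
    share = totals[best] / sum(totals.values())
    return (best if share >= min_share else None), share


# Made-up example: two trusted workers agree, one less trusted worker differs.
answers = {"w1": "football", "w2": "football", "w3": "ballroom"}
reputation = {"w1": 0.9, "w2": 0.8, "w3": 0.3}
label, share = weighted_vote(answers, reputation)
```

When the winning share falls below the cutoff, the function returns no label, which a workflow could treat as a signal to collect more answers rather than accept a shaky result.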