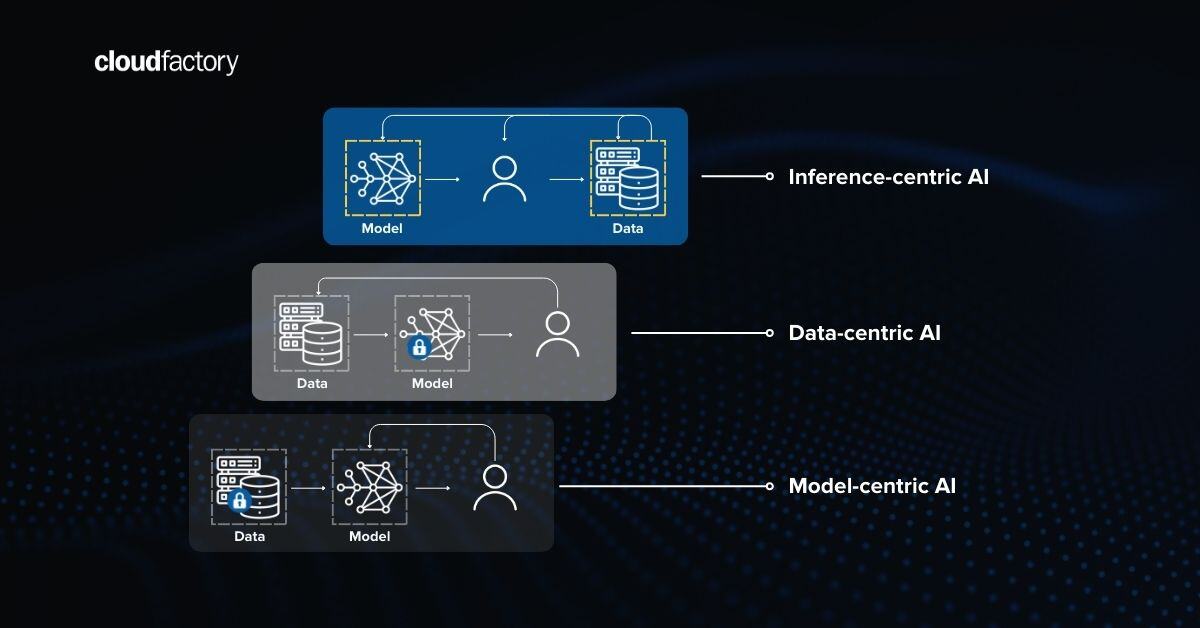

The AI industry has evolved, and developers now understand that the quality of training data can significantly impact a project. However, many AI teams still struggle to ensure their training data is of the highest quality. To create reliable and efficient AI solutions, organizations need to use expert services, cutting-edge technologies, and advanced processes that enable faster deployment with high-quality, structured datasets. This ultimately enhances model accuracy and performance.

The role of high-quality data and precise annotations

One of the cornerstones of success for any AI solution is the quality of the data on which it is trained. For ML engineers, robust, comprehensive, high-quality, and precisely annotated data is essential for developing models that perform accurately, consistently, and efficiently. Such datasets enable AI models to learn effectively, generalize accurately, and perform reliably in real-world scenarios.

Poor-quality data, on the other hand, can lead to inaccurate, overfitted, or underfitted models, wasted resources, and, ultimately, failed projects. Precise annotations provide the context for models to understand and process information correctly, driving better decision-making and enhanced efficiency. At the same time, obtaining better datasets involves a range of comprehensive processes, such as in-depth data analysis, developing specific labeling strategies, smart data curation, state-of-the-art labeling prioritization, and rigorous quality control.

These elements must be addressed to meet the growing demands of AI development.

CloudFactory's AI data services stand in the gap by delivering high-quality, structured datasets, enhancing model accuracy and performance.

Let's explore how.

Dataset analysis

Understanding the quality and distribution of your labeled data is the first step towards improving the dataset. Here, you must identify any edge cases, inconsistencies, or inaccuracies that could impact model performance. This applies both on image and dataset levels, as potential mistakes might lurk in the annotation approach. By thoroughly analyzing your data, we ensure it meets the standards for effective AI training for specific ML use cases.

The dataset analysis service uses advanced techniques such as confident learning, unsupervised learning, clustering, and traditional dataset analysis to verify your data's relevance, distribution, and accuracy. These methods help to uncover hidden patterns and insights, providing a comprehensive view of your dataset's strengths and weaknesses. This ensures that your data is a solid foundation for building robust models.

Data labeling instructions

Clear, comprehensive, precise, and verified data labeling instructions are essential to avoid model confusion and maximize performance. Any ambiguity or inconsistent labeling can lead to significant errors in later stages, such as model training and evaluation. Therefore, having a well-defined labeling strategy is crucial for ensuring that your AI models can accurately interpret and learn from the data.

CloudFactory's AI experts collaborate with you to refine your labeling instructions, including taxonomy and ontology design, annotation type selection, and disambiguation. Our tailored approach meets the specific needs of your project, ensuring that every aspect of your labeling guide is robust and effective. This leads to better dataset and, ultimately, to better model performance.

Data Labeling instructions versioning panel

Data Labeling instructions versioning panel

Data annotation

The CloudFactory’s data annotation service leverages custom-trained models to squeeze every last drop of automation for the annotation process. By incorporating advanced machine learning techniques, the ML team ensures that the annotation process is streamlined while remaining efficient and accurate. This automation significantly reduces the time and effort required for manual annotation, allowing your team to focus on other critical tasks.

We train up to eight AI labeling assistants on your data according to your annotation strategy, retraining them as the project progresses to improve performance continuously. The iterative approach ensures that the models improve over time, adapting to the specific nuances of the data and use case.

Additionally, we implement an unbiased teacher-student model trained on the unlabeled portion of your data to maximize automation potential, ensuring high-quality annotations at scale. This extensive approach guarantees the generated annotations are consistent, reliable, and ready for solid AI training.

Dataset curation

Raw datasets can contain hundreds of thousands of samples to label in the vast sea of information. Dividing noise and data points of the highest relevance reduces development efforts and is crucial for effective data labeling and AI development.

The dataset curation service uses unsupervised learning, vector analysis, and other data exploration techniques to curate and select the most relevant data samples from buckets of unlabeled data. These advanced methods help us sift through large datasets to reduce noise and find the most promising data points. This ensures that your resources are focused on the most useful data for your application, leading to better AI models.

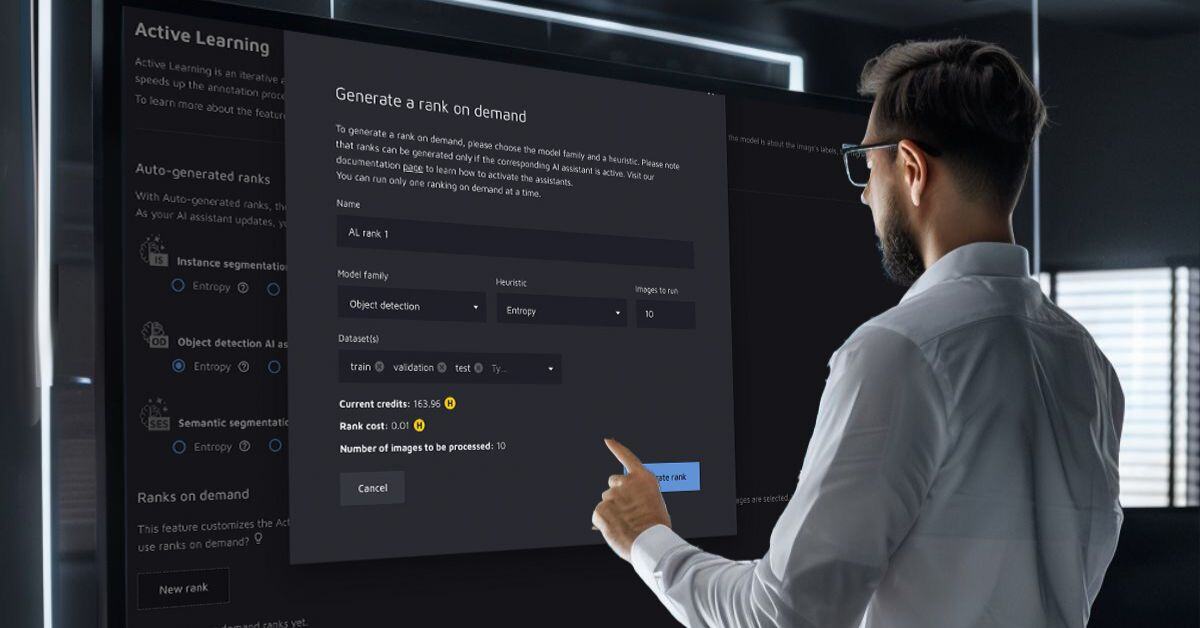

Labeling prioritization

Efficient labeling is key to maintaining a streamlined workflow. Properly prioritized labeling ensures that your team focuses on the most impactful data, saving time and enhancing the overall efficiency of the AI development pipeline.

We use Bayesian optimization with specific models or classes to prioritize your annotation queues. This advanced technique allows the team to continuously refine the priority list, ensuring that the most relevant data is always at the forefront of the labeling process. By dynamically adjusting priorities, we maximize the labeling workforce's effectiveness and improve the quality of labeled datasets, aligning closely with the project's specific needs and objectives.

Quality assurance

Quality assurance is critical to maintaining the integrity of your data. Ensuring that every annotation is accurate and reliable is paramount for developing robust AI models. Without rigorous quality control, the risk of introducing errors and inconsistencies into your dataset increases, compromising model performance.

We implement a consensus scoring approach with confident learning that assesses annotation accuracy and identifies possible errors for the QA team to review. Our comprehensive verification loop enhances data reliability by leveraging multiple models to validate each annotation.

By comparing annotations to what multiple models perceive as the ground truth, we minimize errors and effectively surface ambiguities in ontologies and taxonomies, ensuring the highest quality data for your AI projects. This dedication guarantees that your data remains consistent and of the highest standard, supporting the development of accurate and effective AI solutions.

Annotations review panel

Annotations review panel

Real-world scenarios

A Computer Vision (CV) startup needs dataset evaluation

A CV startup is developing a hockey sports analytics system to track players on the field. They have a labeled dataset and want to use third-party expertise to analyze and evaluate it. The ultimate end result is getting guidance on the current state of the dataset and recommendations for improvement.

Solution: CloudFactory leverages its "Dataset Analysis" and "Quality Assurance" services to address the need.

Dataset analysis

- Data quality verification: Use confident learning and classical dataset analysis techniques to assess the dataset's quality, relevance, and distribution.

- Unsupervised learning: Apply clustering methods to identify patterns and anomalies, ensuring the dataset accurately represents target classes.

- Model training Insights: Train dummy models to provide detailed insights into the dataset's strengths and weaknesses, guiding future data collection and labeling efforts.

Rigorous dataset analysis using confident learning, unsupervised learning, and classical techniques.

Rigorous dataset analysis using confident learning, unsupervised learning, and classical techniques.

Quality assurance

- Annotation accuracy: Employ a consensus scoring approach with confident learning to evaluate annotation accuracy and surface any inconsistencies.

- Error identification: Use multiple models to compare annotations to the perceived ground truth, minimizing errors and identifying ambiguous data points.

- Recommendation report: Deliver a comprehensive report with specific tuning recommendations to enhance the dataset's quality and ensure reliable AI model performance.

Here, we provide the startup with a clear and comprehensive understanding of their current dataset's quality and actionable insights for improvement. The refined dataset would lead to:

- Improved accuracy: Enhanced tracking precision of players within the hockey field.

- Operational efficiency: Streamlined annotation process with reduced errors and rework.

- Reliable performance: Robust AI model capable of delivering consistent and accurate predictions in the target domain.

A Construction company needs to label data from scratch

A large company in the construction field is developing a site security system to track whether builders are following safety measures, such as wearing hard hats. The company has abundant raw data but requires comprehensive guidance to label it. They want to collaborate with CloudFactory to start and execute the data labeling process.

Solution: CloudFactory utilizes "Data Labeling Instructions," "Data Annotation," and "Labeling Prioritization" services to meet the needs of the client:

Data labeling instructions

- Taxonomy and ontology design: Collaborate to design a clear and comprehensive taxonomy and ontology for labeling safety measures.

- Annotation type selection: Define the appropriate annotation types to ensure precise labeling of safety gear, such as hard hats.

- Disambiguation guidelines: Provide detailed guidelines to avoid confusion and ensure consistency across the annotation strategy.

Data annotation

- Custom-trained models: Use custom-trained AI models to automate the initial annotation process.

- Iterative model training: Use continuously retrained models to improve annotation accuracy and efficiency.

- Large-scale annotation: Handle large volumes of raw data, ensuring comprehensive and accurate labeling to meet the company’s needs.

Labeling prioritization

- Bayesian optimization: Utilize state-of-the-art technologies to prioritize the most critical data for labeling, ensuring efficient use of resources.

- Dynamic adjustment: Recalculate priorities iteratively as the project progresses to keep the focus on the most relevant and impactful data points.

- Efficient workflow: Maintain a streamlined and efficient workflow, reducing the time required to label essential data.

In this case, we enable the construction company to obtain a meticulously labeled dataset, enhancing their site security system's accuracy and effectiveness. The high-quality data would be a solid foundation to:

- Improved safety monitoring: Enhanced ability to track and ensure compliance with safety measures on construction sites.

- Reliable performance: Robust AI models capable of accurately identifying safety compliance, leading to a safer work environment.

Need accurate datasets for peak model performance?

CloudFactory's AI data services provide a comprehensive solution for optimizing AI initiatives to ensure high accuracy throughout the data lifecycle. With the help of expert services and a powerful AI data platform, you can gather, curate, label, and refine data to enhance your pre-trained AI models.

Achieve faster deployment with confidence, knowing that your AI models are built on a strong foundation of accurate and reliable data—whether you're a startup looking to assess and refine existing datasets or a large corporation initiating a new labeling project.

Data Labeling AI & Machine Learning Data Annotation AI Data Platform AI Data