As you know by now, the success of your machine learning (ML) models rests heavily on the quality of your training data. To ensure your AI solutions learn effectively and produce accurate results, meticulous data annotation and rigorous quality assurance are paramount.

When weighing your options, consider a full-stack data labeling solution that integrates labeling software, AI-powered automation, and skilled human annotators. This combined approach delivers speed, accuracy, and the adaptability to handle complex labeling tasks: AI tools excel at repetitive, well-defined tasks, while human experts provide nuance and contextual understanding.

Combining AI-powered labeling with expert annotators yields the highest-quality training data for robust ML models, and optimizing how people and tools work together drives further performance gains. Treat machines as partners in that work.

Now, let's dig into the steps for selecting data labeling tools that streamline processes, maximize data quality, make the most of your workforce investment, and move your ML initiatives forward in 2024 and beyond:

- Evaluate your company's stage in the AI lifecycle

- Align with your automation level

- Examine tool capabilities and fit

- Compare the benefits of build vs. buy

- Beware of contract commitment and tool limitations

Evaluate your company's stage in the AI lifecycle

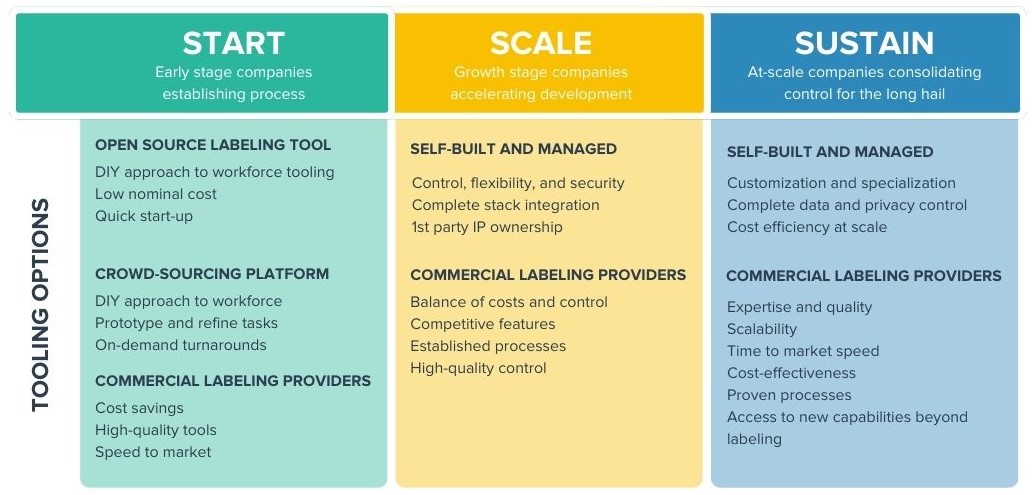

Your organization's current size and the sophistication of your AI processes will heavily influence which ML data annotation tool fits best. Consider these approaches for each stage:

Getting started

For smaller organizations or those new to the labeling process, commercially available tools offer ease of use and a streamlined setup. If crowdsourcing is necessary, be aware that the anonymous nature of crowdsourced workforces can lead to challenges with data quality and with understanding your project's specific context. Your best bet is a professionally managed, expert data labeling workforce.

Scaling up

As your company grows, commercially available tools with light customization options become a strong choice. This approach lets you adapt features to your specific needs without requiring large development teams. Additionally, seek out data annotation providers that use AI-powered acceleration: their tooling can rapidly scale your training datasets alongside your company's growth, producing faster and more accurate annotations.

Sustaining scale

Once you're operating at a large scale, consider highly customizable commercial tools that minimize the need for extensive in-house development resources. Partnering with data labeling companies that use active management is crucial at this stage. Active management ensures that the quality of your labeled data is consistently monitored and that feedback loops are in place to improve labelers' work or refine processes over time. If you prefer open-source solutions, invest in planning and building robust integrations; that groundwork is what lets you realize the flexibility and security benefits of open-source options over the long term.

Align with your automation level

Modern ML solution development focuses on streamlining workflows through automation, saving time, reducing errors, and minimizing costs. This makes automation a key feature to seek in an annotation tool.

There are two main approaches to ML annotation: pre-labeling and human-in-the-loop annotation.

Pre-labeling

Pre-labeling uses algorithms or pre-trained models for automatic data labeling. This offers speed and budget efficiency for large, simple datasets but might lack accuracy for complex tasks.

Here’s a scenario showing how pre-labeling can streamline vehicle image classification:

Imagine a company aiming to build an ML model that accurately identifies and classifies various vehicles in images. However, it faces a key challenge: its massive image dataset is raw and unlabeled, so it can't be used to train the model.

To address this, they turn to pre-labeling. A pre-trained image recognition model, already capable of identifying basic vehicle types (cars, trucks, vans, motorcycles), is used to label their dataset automatically. This provides the company with a quickly labeled dataset, allowing it to jumpstart the training of its specialized vehicle identification model.
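A pre-labeling pass like the one described above can be sketched as a loop that runs a model over each raw image and stores its top guess with a confidence score. This is a minimal illustration under stated assumptions: `fake_predict` and its canned outputs are hypothetical stand-ins for a real pre-trained classifier, and the `PreLabel` record shape is invented for this example rather than taken from any particular tool.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class PreLabel:
    image_id: str
    label: str
    confidence: float
    source: str = "model"  # machine-generated, not yet verified by a human


def pre_label(
    image_ids: Iterable[str],
    predict: Callable[[str], tuple[str, float]],
) -> list[PreLabel]:
    """Run a pre-trained model over raw images and keep its top guess for each."""
    labels = []
    for image_id in image_ids:
        label, confidence = predict(image_id)
        labels.append(PreLabel(image_id, label, confidence))
    return labels


# Hypothetical stand-in for a real pre-trained vehicle classifier.
# A real model would read image pixels; here we just look up canned outputs.
CANNED_PREDICTIONS = {
    "img_001": ("car", 0.97),
    "img_002": ("truck", 0.58),
    "img_003": ("motorcycle", 0.91),
}


def fake_predict(image_id: str) -> tuple[str, float]:
    return CANNED_PREDICTIONS.get(image_id, ("unknown", 0.0))


if __name__ == "__main__":
    for item in pre_label(["img_001", "img_002", "img_003"], fake_predict):
        print(f"{item.image_id}: {item.label} ({item.confidence:.2f}, {item.source})")
```

Keeping the confidence score alongside each label matters: it is what a later review step can use to decide which pre-labels to trust.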

Pre-labeling can be a powerful tool for ML, but it can also introduce inaccurate labels, and correcting those errors later erodes the efficiency it was meant to deliver.

Human-in-the-loop annotation

Human-in-the-loop annotation, on the other hand, combines pre-labeling with human review, increasing accuracy and making nuanced tasks feasible.

Returning to our example, the pre-trained model, designed for general image recognition, might mislabel a van as a truck or struggle to distinguish between similar car models like a sedan and a sports car. To ensure accuracy for finer distinctions (specific car models, rare vehicles), the company will likely need to incorporate human verification or additional labeling efforts.
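One common way to combine the two is to auto-accept confident pre-labels and route the rest to human annotators. The sketch below assumes a single confidence threshold of 0.85, which is an arbitrary illustrative value; the record shape and function names are hypothetical, not any specific tool's API.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PreLabel:
    image_id: str
    label: str
    confidence: float
    reviewed: bool = False  # True once a human has checked the label


def route_for_review(pre_labels, threshold=0.85):
    """Accept confident pre-labels; queue the rest for human review."""
    accepted, needs_review = [], []
    for item in pre_labels:
        if item.confidence >= threshold:
            accepted.append(item)
        else:
            needs_review.append(item)
    return accepted, needs_review


def apply_human_review(needs_review, corrections):
    """Replace model labels with human-verified ones.

    `corrections` maps image_id -> the label a human annotator assigned.
    Items the reviewer left unchanged keep the model's label but are
    still marked as reviewed.
    """
    return [
        replace(item, label=corrections.get(item.image_id, item.label), reviewed=True)
        for item in needs_review
    ]


if __name__ == "__main__":
    batch = [
        PreLabel("img_001", "car", 0.97),
        PreLabel("img_002", "truck", 0.58),  # actually a van
        PreLabel("img_004", "car", 0.62),    # actually a sports car
    ]
    accepted, queue = route_for_review(batch)
    verified = apply_human_review(queue, {"img_002": "van", "img_004": "sports car"})
    for item in accepted + verified:
        print(item)
```

In practice the threshold is a tuning knob: lowering it sends less work to humans at the cost of more unchecked errors.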

Whichever approach you choose, the degree of annotation automation can vary. The computer vision industry currently describes it in three levels:

- Level 1: annotate a single object in a single image in a matter of seconds;

- Level 2: annotate an entire image in a matter of seconds;

- Level 3: annotate a large batch of images in a matter of minutes.

Not every data labeling tool supports all three levels; making them work within a single system takes specific expertise, architecture, and infrastructure. Also consider quality control: modern technology makes it possible to semi-automate the process, so a tool that already has this built in is a major advantage.

The best data labeling tools maximize automation throughout the workflow, including semi- or fully automated annotation and AI-powered quality control.

Examine tool capabilities and fit

Another pivotal factor to consider in an ML data labeling tool is its capabilities. Whatever the price, the tool must ultimately help you solve your task as effectively as possible while offering the support and scalability options you need.

Therefore, the critical considerations in this step are:

- Highly precise annotations and quality control: Ensure the tool provides accurate labeling instruments and includes mechanisms for quality control to detect and correct errors.

- Annotation types: Assess whether the tool supports various annotation types, such as bounding boxes, masks, polygons, keypoints, etc., based on the requirements of your project and ML task.

- Annotator experience: Evaluate the tool's user interface for ease of use, intuitiveness, and efficiency in completing labeling tasks.

- Flexibility: See whether a tool allows customization of labeling workflows, label types (for example, converting polygons to masks and back), and interface configurations to suit specific project needs.

- Inter-team collaboration: Consider tools that facilitate collaboration among team members through user permissions, commenting, real-time annotation, task assignment, and consensus scoring.

- Scalability: Assess the tool's ability to handle large datasets and adapt to your future growth. Switching tools can be costly, so choosing a platform that scales alongside your needs is necessary.

- Automation and AI integration: Explore the tool’s options for automating labeling tasks through AI-assisted labeling features, which speed up development and reduce manual effort.

- Data security and privacy: Prioritize platforms with robust data security measures and ensure the tool complies with relevant regulatory requirements, especially in industries with sensitive data, strict data governance, and compliance standards.

- Integration with other platforms: Determine whether the tool integrates seamlessly with your existing data management platforms.

- Annotation consistency: Verify the tool's ability to maintain consistency in annotations across different annotators and labeling sessions.

- Support for various formats: Ensure the tool supports the formats you need for easy integration into your ML pipeline. This applies to data, annotations, ML models, etc.

- Customer support and training: Assess the availability and quality of customer support services, including documentation, tutorials, training resources, and knowledge hubs.

- Offline labeling support: If offline labeling is required, ensure the tool offers offline functionality and synchronization capabilities.

- Review and feedback: Assess whether the tool supports features that enable annotation review and feedback loops to iteratively improve labeling quality.

- Additional features: Evaluate any extra capabilities (e.g., active learning, quality control) that streamline your process.
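Two of the checks above, consensus scoring and annotation consistency, are simple to reason about in code. The sketch below uses majority voting for class labels and intersection over union (IoU) for bounding boxes; these are common generic techniques, not a description of how any particular tool computes its scores. The 0.6 agreement cutoff and all names are hypothetical.

```python
from collections import Counter


def consensus(votes: dict[str, str]) -> tuple[str, float]:
    """Majority label across annotators plus the share who agreed with it.

    `votes` maps annotator id -> label. On a tie, Counter.most_common
    returns the label that was counted first.
    """
    counts = Counter(votes.values())
    label, n = counts.most_common(1)[0]
    return label, n / len(votes)


def flag_low_agreement(items: dict[str, dict[str, str]], min_agreement: float = 0.6):
    """Return (item_id, majority_label, agreement) for items needing adjudication."""
    flagged = []
    for item_id, votes in items.items():
        label, agreement = consensus(votes)
        if agreement < min_agreement:
            flagged.append((item_id, label, agreement))
    return flagged


def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    items = {
        "img_001": {"ann_a": "car", "ann_b": "car", "ann_c": "car"},
        "img_002": {"ann_a": "van", "ann_b": "truck", "ann_c": "van"},
        "img_003": {"ann_a": "sedan", "ann_b": "sports car", "ann_c": "coupe"},
    }
    print(flag_low_agreement(items))
    # Two annotators' boxes for the same vehicle, offset horizontally.
    print(iou((0, 0, 10, 10), (5, 0, 15, 10)))
```

When trialing a tool, it can help to ask whether it exposes per-item agreement like this, so low-consensus items can be sent back for review rather than silently averaged.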

Ultimately, you’ll want the most powerful and tailored solution for your annotation needs. For example, if your work centers on computer vision, prioritize tools specializing in that domain, as they often offer more advanced and relevant features.

Compare the benefits of build vs. buy

While building your own annotation tool can provide the greatest flexibility, customization, and control, consider this path only if it genuinely enhances your unique value offering to customers.

Otherwise, purchasing a commercially available solution is a better long-term strategic choice.

- Buying a tool often leads to lower costs, allowing you to direct resources toward other critical areas of your ML project. You'll benefit from tailored configuration options and dedicated user support. The rapid advancement of data annotation tools means multiple strong commercial options exist for most ML domains.

- Choosing a commercial annotation tool means you benefit from a dedicated development team focused on continuous tool improvement, feature expansion, and expertise in data annotation workflows. Because ML technologies advance so rapidly, outsourcing this work frees your in-house developers from the burden of continually scaling and specializing their own annotation capabilities.

- Commercial platforms allow you to select the best tool for each specific use case and switch tools as your needs change. This flexibility provides adaptability for different workloads and ensures data portability, avoiding the potential lock-in of in-house development.

- By outsourcing annotation tooling, your internal team can fully prioritize developing your core product's unique features and competitive advantages. The approach helps you avoid the opportunity cost and potential for technical debt that comes with building and maintaining an in-house annotation solution.

Beware of contract commitment and tool limitations

Cost is inevitably a major factor when choosing an ML data labeling tool. Pricing models for these tools can vary widely, impacting your project's budget and flexibility.

Here's what you need to know:

- Pay-per-annotation: Charges are based on the number of annotations created.

- Subscriptions (monthly or annual): A fixed cost for a set number of annotations within a given time frame.

- One-time fee: Unlimited access, but ongoing support or updates may cost extra.

- Pay-as-you-go: Payment for specific features as needed.

These are standard data labeling pricing models; you might encounter a few others with varying contract terms.

It all boils down to understanding and comparing contract commitments across tools. Some models may lock you into long-term subscriptions or carry hidden fees. Carefully evaluate pricing against your project's scope and budget. You don't want to pay for annotation features you don't need, but you do need a tool that provides the accuracy, efficiency, and flexibility necessary to power your AI initiatives.
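Comparing pricing models is mostly arithmetic once you estimate your monthly annotation volume. The sketch below compares pay-per-annotation pricing with a subscription that includes a fixed quota plus per-annotation overage. All prices are hypothetical (5 cents per annotation versus $2,000 a month for 50,000 annotations with 4-cent overage) and are not quotes from any vendor; amounts are kept in integer cents to avoid floating-point rounding.

```python
import math

# All amounts are in cents to avoid floating-point rounding.


def pay_per_annotation_cost(volume: int, price_per_annotation: int) -> int:
    return volume * price_per_annotation


def subscription_cost(volume: int, monthly_fee: int, included: int, overage_price: int) -> int:
    """Fixed fee covers `included` annotations; extra ones are billed individually."""
    overage = max(0, volume - included)
    return monthly_fee + overage * overage_price


def break_even_volume(price_per_annotation: int, monthly_fee: int) -> int:
    """Smallest monthly volume at which the subscription is no more expensive.

    Only meaningful when the result is <= the subscription's included quota.
    """
    return math.ceil(monthly_fee / price_per_annotation)


if __name__ == "__main__":
    PRICE = 5  # hypothetical: 5 cents per annotation
    FEE, INCLUDED, OVERAGE = 200_000, 50_000, 4  # hypothetical subscription terms
    for volume in (20_000, 40_000, 60_000):
        ppa = pay_per_annotation_cost(volume, PRICE)
        sub = subscription_cost(volume, FEE, INCLUDED, OVERAGE)
        print(f"{volume:>6} annotations: pay-per ${ppa / 100:,.2f} vs subscription ${sub / 100:,.2f}")
    print("break-even volume:", break_even_volume(PRICE, FEE))
```

With these made-up numbers, 20,000 annotations a month cost $1,000 on pay-per-annotation versus $2,000 on the subscription, the two meet at 40,000, and at 60,000 the subscription wins ($2,400 versus $3,000). The point is less the specific figures than checking where your realistic volume falls relative to the break-even point before signing.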

Another important consideration is a tool's potential limitations. These are not always about capabilities, supported features, or the variety of options. It’s a more foundational question of restrictions that can significantly affect your project’s requirements and development pipeline. For instance, a tool might lock your annotated data inside it with no way to export it, or accept existing annotations only in its own proprietary format.

If not identified early on, such obstacles can catch you off guard, resulting in delays, hidden costs, or even project failure. The key to preventing this is an open, transparent discussion with the tool vendor that walks through each step of your planned pipeline.
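One practical way to test for export lock-in during a trial is to export a small sample and confirm you can convert it into an open format your pipeline already reads, such as the COCO object-detection JSON layout (images, annotations with `[x, y, width, height]` boxes, and categories). The input record shape below is a hypothetical example of what a tool might export; a real tool's export will differ.

```python
import json


def to_coco(images, boxes, category_names):
    """Convert a simple box export into a COCO-style detection dict.

    images: [{"file_name", "width", "height"}, ...]
    boxes:  [{"file_name", "label", "x_min", "y_min", "x_max", "y_max"}, ...]
    A KeyError here means the export references an image or label that
    was not declared, which is worth catching before you commit to a tool.
    """
    categories = [{"id": i, "name": name} for i, name in enumerate(category_names, start=1)]
    category_id = {c["name"]: c["id"] for c in categories}

    image_entries, image_id = [], {}
    for i, img in enumerate(images, start=1):
        image_id[img["file_name"]] = i
        image_entries.append(
            {"id": i, "file_name": img["file_name"], "width": img["width"], "height": img["height"]}
        )

    annotations = []
    for ann_id, b in enumerate(boxes, start=1):
        w, h = b["x_max"] - b["x_min"], b["y_max"] - b["y_min"]
        annotations.append({
            "id": ann_id,
            "image_id": image_id[b["file_name"]],
            "category_id": category_id[b["label"]],
            "bbox": [b["x_min"], b["y_min"], w, h],  # COCO uses [x, y, width, height]
            "area": w * h,
            "iscrowd": 0,
        })

    return {"images": image_entries, "annotations": annotations, "categories": categories}


if __name__ == "__main__":
    coco = to_coco(
        [{"file_name": "img_001.jpg", "width": 640, "height": 480}],
        [{"file_name": "img_001.jpg", "label": "van",
          "x_min": 10, "y_min": 20, "x_max": 110, "y_max": 70}],
        ["car", "van", "truck"],
    )
    print(json.dumps(coco, indent=2))
```

If a sample export can't be mapped to a structure like this without guesswork, that is worth raising with the vendor before committing.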

Focus on finding a solution that provides the best value for your needs, considering the contract's annotation pricing, long-term implications, and other limitations.

Benefit from a full-stack AI-powered labeling + workforce platform

When it comes to labeling tools for ML, many companies have found that CloudFactory's Accelerated Annotation platform is uniquely positioned to streamline their workflow and deliver the high-quality data their models demand:

- Active learning: Prioritizes the most impactful images for labeling, ensuring your models learn from the most valuable data.

- AI Consensus Scoring: Eliminates errors through rigorous quality control, guaranteeing your models train on the highest quality data.

- Adaptive AI assistance: Leverages AI models that continuously adapt to your data, boosting labeling speed and accuracy.

- Critical insights: Provides proactive feedback on your models' potential weaknesses, helping you refine your training data for optimal performance.

- Human expertise: Tap into a highly skilled, managed data labeling workforce with extensive Vision AI experience, ensuring complex, nuanced tasks are handled precisely.
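To make the active learning idea concrete, one generic technique is least-confidence uncertainty sampling: score each unlabeled item by how unsure the current model is, then label the most uncertain items first. The sketch below illustrates that general technique only; it is an assumption for illustration and does not describe how CloudFactory's platform selects images. The function names and probability values are hypothetical.

```python
def least_confidence(probs):
    """Uncertainty score: 1 minus the model's top class probability."""
    return 1.0 - max(probs)


def select_for_labeling(predictions, budget):
    """Pick the `budget` items the model is least sure about.

    `predictions` maps item id -> list of class probabilities.
    """
    ranked = sorted(predictions, key=lambda k: least_confidence(predictions[k]), reverse=True)
    return ranked[:budget]


if __name__ == "__main__":
    preds = {
        "img_001": [0.95, 0.03, 0.02],  # model is confident
        "img_002": [0.40, 0.35, 0.25],  # model is torn between classes
        "img_003": [0.60, 0.30, 0.10],
    }
    print(select_for_labeling(preds, budget=2))
```

Least confidence is only one scoring choice; margin- or entropy-based scores are common alternatives that weigh the full probability distribution rather than just the top class.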

If you are considering engaging a data labeling service to free up your internal teams and want to choose the right provider, process, and technology, read our resource: Mastering data labeling for ML in 2024: A comprehensive guide.
