Computer vision trains machines to interpret and understand the visual world around them. As one of the fastest-growing applications of artificial intelligence, it is being deployed across a wide range of industries and use cases.

In healthcare, computer vision supports faster, more accurate diagnoses. In transportation, it informs the movements of autonomous vehicles. In banking and finance, it verifies the authenticity of identification cards and documents. These are just a few of the ways computer vision is changing our world.

However, none of these impressive abilities is possible without image annotation. A form of data labeling, image annotation involves labeling parts of an image to give the AI model the information it needs to understand that image. That’s how, for example, a driverless car can observe and interpret road signs and traffic lights, as well as steer clear of pedestrians.

To annotate images and prepare them for training your AI model, you need a sufficiently large visual dataset and people to annotate it. Common image annotation techniques include drawing bounding boxes around objects and using lines and polygons to demarcate target objects.
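To make the two techniques above concrete, here is a minimal Python sketch of how a bounding box and a polygon annotation might be represented. The class names, field names, and the stop-sign coordinates are assumptions made for illustration; they do not come from any particular annotation tool or export format.

```python
from dataclasses import dataclass


@dataclass
class BoundingBox:
    """Axis-aligned rectangle in pixel coordinates (hypothetical schema)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        width = max(0.0, self.x_max - self.x_min)
        height = max(0.0, self.y_max - self.y_min)
        return width * height


@dataclass
class Polygon:
    """Closed outline given as ordered (x, y) vertices (hypothetical schema)."""
    label: str
    points: list[tuple[float, float]]

    def area(self) -> float:
        # Shoelace formula: sum the cross products of consecutive vertices.
        total = 0.0
        n = len(self.points)
        for i in range(n):
            x1, y1 = self.points[i]
            x2, y2 = self.points[(i + 1) % n]
            total += x1 * y2 - x2 * y1
        return abs(total) / 2.0


# Illustrative annotations of the same octagonal stop sign.
sign_box = BoundingBox("stop_sign", 120, 40, 180, 100)
sign_outline = Polygon(
    "stop_sign",
    [(138, 40), (162, 40), (180, 58), (180, 82),
     (162, 100), (138, 100), (120, 82), (120, 58)],
)

# Share of the bounding box that is background rather than sign.
background_share = 1 - sign_outline.area() / sign_box.area()
```

In this made-up example the polygon covers 2,952 of the box's 3,600 pixels, so about 18% of the bounding box is background. That is the basic trade-off between the two techniques: boxes are quicker to draw, while polygons follow the object more closely.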

There are many myths and misconceptions about AI. Over the last decade, CloudFactory has provided professionally managed teams to annotate images with high accuracy for machine learning applications. Here are a few of the common myths we dispel in our work to label the data that powers AI systems:

Myth 1: AI can annotate images as well as humans.

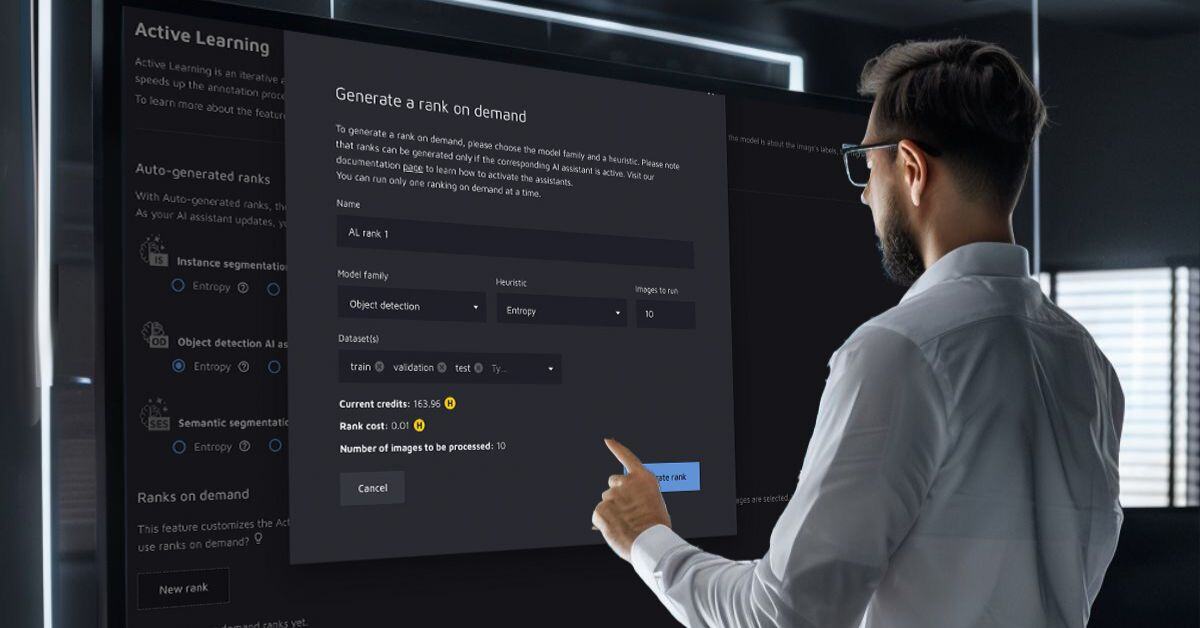

Automated image labeling tools are evolving fast. Automation, with humans in the loop, can save time and money by pre-annotating visual data sets. However, these benefits can come at a significant cost. For example, a poorly supervised learning process can perpetuate errors, which leads to the model becoming progressively less accurate over time, a concept known as AI drift.

Although auto labeling is much faster, it is often less accurate than careful human annotation. Since computer vision is, for the most part, meant to interpret things as humans do, it stands to reason that image annotation still demands human expertise as well.

Myth 2: It doesn’t matter if annotation is off by a pixel.

It’s easy to think of a single pixel as nothing more than a dot on the screen, but when it comes to using data to power computer vision, even a minor inaccuracy in image annotation can lead to serious consequences. For example, the quality of annotations on a medical CT scan used to train machines to diagnose disease can mean the difference between a right and a wrong diagnosis. In an autonomous vehicle, a single mistake during the training phase can mean the difference between life and death.

While not every computer vision model predicts life-or-death situations, accuracy during the labeling phase has a significant long-term effect. Low-quality annotated data can cause problems twice: first during model training, and again when your model relies on what it learned from that data to make future predictions. To build high-performing computer vision models, you must train them using data annotated with high accuracy.
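One way to see what a single pixel can do is to measure the overlap between a correct box and the same box shifted by one pixel. The sketch below uses intersection over union (IoU), a standard overlap metric for bounding boxes. The box sizes are assumptions chosen for illustration, not figures from a real dataset.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlapping region (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


# Illustrative case 1: a small 10x10 object, label shifted one pixel in x and y.
small_iou = iou((0, 0, 10, 10), (1, 1, 11, 11))

# Illustrative case 2: a large 100x100 object with the same one-pixel shift.
large_iou = iou((0, 0, 100, 100), (1, 1, 101, 101))
```

With these numbers, the one-pixel shift leaves the large box with an IoU of about 0.96 but drops the small box to about 0.68. For small targets, such as a distant traffic light or a tiny lesion on a scan, a one-pixel error can therefore account for a large share of the object.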

Myth 3: It’s easy to handle image annotation in-house.

You might think of image annotation as nothing more than a repetitive, easy task. After all, it doesn’t demand any expertise in artificial intelligence. But that doesn’t mean you should do the work in-house. Image annotation requires training, access to the right tools, knowledge of your business rules and how to handle edge cases, and quality control. It’s also very expensive to have your data scientists handle the labeling. Scaling in-house teams is difficult due to the often tedious and repetitive nature of the work, which can lead to employee churn. And you will bear the burden of onboarding, training, and managing members of the annotation team.

Choosing the right people to annotate your data for computer vision is one of the most important decisions you will make. To annotate high volumes of data at scale over extended periods of time, it’s best to use a managed, outsourced team. Direct communication with that team is helpful for making adjustments to your annotation process along the way, as you test and train your model.

Myth 4: Crowdsourcing can be used for image annotation at scale.

Crowdsourcing gives you access to a large number of workers at the same time. But there are drawbacks to this approach that make it difficult to use for annotation at scale. Crowdsourcing uses anonymous workers, and the workers change over time, which means that they’re less accountable for quality. Moreover, you can’t leverage the benefits that come with workers’ becoming more familiar with your domain, use case, and annotation rules over time.

Another disadvantage of crowdsourcing is that it typically uses the consensus model to measure annotation quality. That means several people are assigned to do the same task, and the correct answer is the one that comes back from the majority of workers. So, you pay to have the same task done several times.
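The consensus model described above can be sketched in a few lines of Python. This is a simplified illustration that assumes each worker returns a single class label per image; the function name, the strict-majority rule, and the example numbers are assumptions, not a description of how any specific crowdsourcing platform works.

```python
from collections import Counter


def consensus_label(votes):
    """Return the label chosen by a strict majority of workers, else None."""
    if not votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    # Require more than half of the votes; ties and split votes yield no answer.
    return label if count * 2 > len(votes) else None


# Three hypothetical workers label the same image.
agreed = consensus_label(["pedestrian", "pedestrian", "cyclist"])

# No strict majority: the task would need another round of labeling.
unresolved = consensus_label(["pedestrian", "cyclist"])

# Cost side of consensus: every image is paid for once per worker.
num_images = 10_000
workers_per_image = 3
paid_tasks = num_images * workers_per_image
```

Here the first image resolves to "pedestrian", the second stays unresolved, and labeling 10,000 images with three workers each means paying for 30,000 tasks.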

Crowdsourcing might make sense when you are completing a one-time project or testing a proof of concept for your model. Managed, outsourced teams are a better option for longer-term annotation projects that require high accuracy.

The bottom line

Using poorly annotated images to train a computer vision model can backfire. Low-quality annotations affect your model training and validation process, as well as the decision-making capabilities of your model, because it learns from those annotations to make decisions in the future. By working with the right workforce partner, you can ensure higher quality annotations and, ultimately, better performance for your computer vision model.

Learn more about image annotation in our guide, Image Annotation for Computer Vision.
