AI is developing rapidly, and all of its subfields are keeping up the pace, bringing new advancements and technologies to meet the ever-growing market demands.

Data annotation automation is one of the hottest technical topics for us right now, and we have covered it extensively in our white paper about top computer vision model building, annotation acceleration, and automated labeling approaches.

Also known as auto labeling or pre-labeling, this technology can potentially revolutionize how we label data for AI/ML models. While the approach isn’t a brand-new innovation in the data annotation market, it has developed over the years into the industry's gold standard, and there’s plenty of room for growth.

This post outlines what your ML team needs to know about auto labeling to increase automation efficiency and accuracy. In this post, we'll cover:

- What’s auto labeling?

- Top benefits of auto labeling

- The limitations of auto labeling

- Making the most of auto labeling for ML

You may also be interested in the key decision points your team needs to make when creating their automation strategy by reading our recent blog post on the four layers of automated labeling for faster AI goals.

What’s auto labeling?

Automated labeling uses ML algorithms or pre-existing models to generate annotations automatically. Such an approach augments the work of humans in the loop, saving time and money on the data annotation step of the ML pipeline. The auto labeling process involves the following steps:

- Apply an algorithm or a model to raw data.

- Get the predicted labels for the whole batch of data.

- You may (or may not) pass the data and annotations to a human workforce for quality assurance.

Most labeling tools today support automated labeling in either of these two ways:

- Tools that help you with annotations but do not help you with models. Such solutions need internal support for steps 1 and 2 of the automated data labeling process. However, they offer to upload pre-labeled data into their system for further analysis, so their focus is step 3.

- Platforms that help you both with annotations and ML models. Such tools offer complete support for the whole auto labeling process.

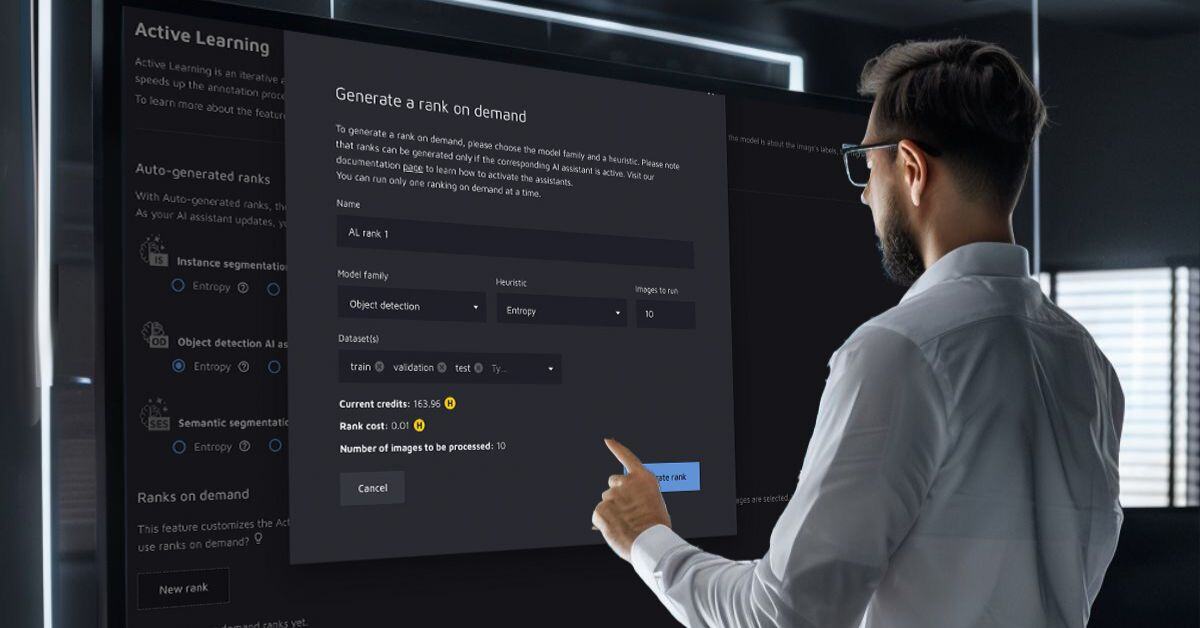

CloudFactory’s internal data annotation platform has auto labeling capabilities that support our data analysts throughout the workflow and offer custom-built prediction models. The platform also implements an embedded neural network that can learn from every annotation made, adjusting to any use case on the fly. Our data analysts upload pre-labeled data and skip steps 1 and 2 if needed and this saves us time and resources and improves our clients' data annotation workflows.

Auto labeling may seem like the dream workflow—you get the data, pass it through a black-box type of model, obtain the result, adjust as needed, and proceed with building your ML solution.

However, every labeling approach has its drawbacks, and pre-labeling is no exception. Let’s go through the benefits and limitations step by step.

Top benefits of auto labeling

From our experience, the top benefits of auto labeling are:

- Resource efficiency: Without a doubt, automated data labeling can massively speed up the annotation process. Significant automation capabilities make labeling more cost-, time-, and labor-efficient.

- Annotation consistency: Consistent algorithms mainly perform automated data labeling—their rules are applied uniformly to all data assets, enabling you to build better ML solutions.

- Scalability: Auto labeling ensures your annotation workflow is scalable and can handle large volumes of data.

- Agile approach: Auto labeling allows you to perform data labeling in quick iterations and be truly agile. For example, adding a new class to your annotation taxonomy will be less painful with auto labeling compared to more conventional approaches. Also, with better models, labels can be updated quickly with high quality, enabling streamlined annotation cycles.

- Independence and security: You do not necessarily need complex annotation tools or platforms to perform auto labeling. Generating labels can be done in your system, which guarantees the security of your intellectual property (i.e. your auto labeling model).

Over the past decade, CloudFactory has combined auto labeling with human expertise to scale AI projects in various industries.

Here are our main findings:

- The primary application of automated labeling is pre-annotating some or all of your data. Then, you bring in expert annotators to review, adjust (if needed), and complete the annotations. Maintaining the human factor is essential as the auto labeling model is just an algorithm that does not guarantee 100% accuracy as there will always be exceptions and edge cases. But even so, as a result, you reduce the human labor needed to produce labels while ensuring that your annotations are of high quality.

- Another approach is possible when you have high confidence in your auto labeling model's predictions. You can ask the model to assign a confidence level to each annotation based on the use case, task difficulty, and other factors, allowing you to reduce human labor further, as data labelers can focus on the annotations with the lowest confidence levels.

As for the time savings, we have run several experiments in various scenarios and found out that:

- If an auto labeling model provides low-quality predictions, it increases the time needed for an annotation task. It is easier to create a label from scratch than adjust an existing one, even when using an intuitive annotation tool.

- If an auto labeling model is satisfactory from scratch and learns over time based on produced annotations, then the time improvements can be massive. In our findings, the exact numbers varied from 10% to 50% less time needed to complete the annotation job while maintaining high quality.

The limitations of auto labeling

On a sour note, the drawbacks of auto labeling are:

- Quality: Auto labeling is done mainly by models that might produce highly inaccurate labels and lack generalization capabilities. Therefore, auto labeling is only possible with manual QC for identifying and adjusting mistakes, even if you are sure your model is good.

- Application area: Auto labeling is helpful for use cases that are relatively uncomplicated and easy to label, such as traditional classification tasks. Problems that require human genes, extrapolation capabilities, domain expertise, and contextual familiarity are not a good fit for fully automated labeling.

- Vulnerability to data shifts: Auto labeling needs a lot of training data. If a data shift occurs, the model risks underperformance and may result in unplanned costs and the need for additional human intervention.

- Use case dependency: Auto labeling is use-case-dependent, meaning you need a precise evaluation of your domain, data type, exact task, etc., before opting for auto labeling. For example, we do not recommend out-of-the-box automated data labeling options for complex spatial tasks like detecting small objects on gigantic 5000x5000 pixels images; it's better to have experts in the loop in this case. However, auto labeling might be an excellent fit if UAV collects your data and is of standard HD format.

Making the most of auto labeling for ML

For auto labeling to help you enhance your workflows, save time and resources, and achieve your AI/ML goals faster, you need to be willing to iterate and maintain an expert human touch.

That’s where CloudFactory’s industry-leading Accelerated Annotation comes in.

Accelerated Annotation offers 5x faster labeling speed and is powered by adaptive AI assistance, critical insights, deep expertise, and a proven workflow so you can achieve the quality and speed needed to accelerate AI/ML initiatives.

If you're building your annotation strategy and need greater detail into the most effective automated labeling strategies, download our comprehensive white paper: Accelerated Data Labeling: A Comprehensive Review of Automated Techniques.