You're a brick-and-mortar retail innovator. You see the writing on the wall—self-service checkout is here, and autonomous checkout is coming. You need to jump into the game. And scale quickly.

Innovative retailers and retail providers make the leap for clear reasons: keeping the lights on despite staff shortages, increasing shopper foot traffic, elevating the customer experience, and maintaining high store loyalty. On top of that, autonomous checkout (also called contactless or frictionless checkout) breathes new life into stores and frees retail employees for more meaningful work, like creating product displays, walking the floor to help customers, and overseeing the vibrancy and operations of the store.

But behind the scenes, creating and refining an autonomous checkout solution is no easy feat. Building computer vision machine learning models for autonomous checkout requires staggering amounts of image and video training data. And although data annotation sounds easy (just draw a bounding box around an object in an image, right?), it usually isn’t.

One of our clients in the retail space, a leader in autonomous checkout, explains how the work adds up:

“Using raw footage from cameras, our annotators have to label each person with a unique ID and all body parts on each person. It’s not an easy crop. It’s work that takes minutes per task instead of seconds.”

Have you ever segmented the human form with an annotation tool? It takes time. And, even when you’ve established methods for annotating images and videos accurately, precisely, and efficiently, two hurdles always pop up:

- Quickly scaling training data annotations is hard — especially while holding on to quality.

- You’re going to need a data labeling, or annotation, workforce to do it.

The keyword there is quickly: It’s one thing to want to implement autonomous checkout. It’s another to actually get it up and running successfully — and generating revenues that far exceed costs.

Read on to discover three workforce management options for scaling fast and what it takes, in our experience, to do so while preserving quality.

Scaling autonomous checkout training data

Depending on the tech you’re building—whether smart cart, smart basket, or grab-and-go technology—making autonomous checkout a reality requires an initial load of well-annotated training data so your algorithm can accurately identify products, people, and the position of nearly everything in the store. In a well-done 25-minute video, an innovative company in this space, Grabango, covers the inherent retail vision challenges and opportunities you may face.

Another thing: Companies plunging into autonomous checkout are often working with tight deadlines and against logistical challenges:

- Retrofitting an existing store with a grab-and-go system often occurs over several late nights so that the installation process won’t impact foot traffic and sales. (Another innovator in this space, Zippin, gets it done in one or two days.)

- Adding a smart cart or smart basket system requires inventorying, photographing, or filming all existing products or SKUs to be fed to an ML algorithm.

- You also need to implement and integrate methods for customers to access the autonomous checkout system and pay for their goods. Will shoppers use a mobile app, an integration with existing POS systems (like Caper’s plug-and-plays), a credit card system, a facial recognition system (gasp!), or something else?

- And then you have to test everything and iron out the kinks quickly!

After you solve the initial logistical challenges, you need to test your machine learning (ML) model in the live environment — some companies use a soft launch, while others prefer to keep operations moving and work out the quirks as they go.

Either way, your production model has to be in great working order quickly, which is why training data for your ML model has to be labeled quickly.

All the while, the clock is ticking. Getting started and scaling data annotation projects quickly is imperative — you don’t want to negatively impact the bottom line with ML model project delays. You might have anywhere from a few days to a few weeks to get a model trained and into production.

And it’s not over after the initial build stage. Once the initial training data is labeled, the model needs to be refined over time. Edge cases and exceptions need to be dealt with. And, of course, new products you add to your lineup have to be labeled, too. This has to happen for each store, use case, or change in products. The model continually needs to ingest newly labeled training data, which strains your labeling capacity. In effect, you’re creating a model for each store and constantly feeding the algorithm beast. Those algorithms are as hungry as dads in the grocery store at five o’clock on a Friday evening who forgot to do the shopping.

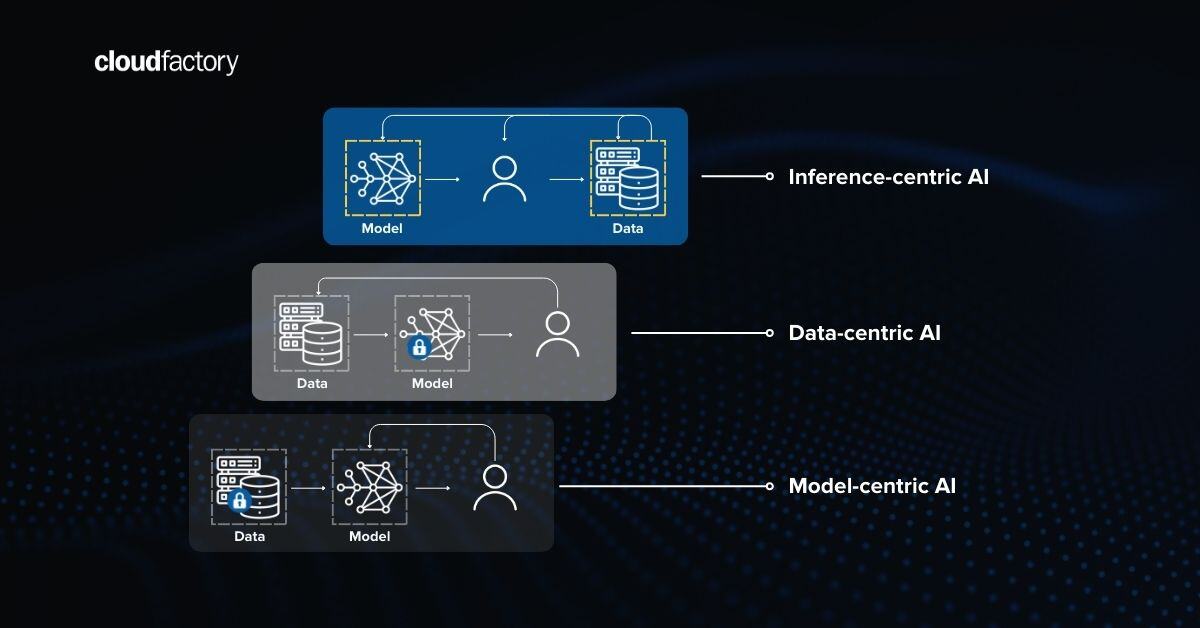

Data is fed into every step of the model development lifecycle, from the initial design and build to deployment and operationalization, and then onward to model refinement and optimization.

Check out this collection of AI model development resources for guidance on each of those stages.

Now, back to the main question:

How do you rapidly scale data labeling without sacrificing quality?

You have a range of options for starting and scaling autonomous checkout data annotation projects. Let’s take a look at each.

Scaling data annotation with internal teams: High quality, but slow

Because the tasks involved are so intensive and so numerous, high-quality annotation projects run by internal teams tend to scale slowly (or not at all). On the plus side, you can closely monitor internal teams and will always know the status of projects. On the minus side, internal teams take time to build and manage. You might have to hire people with the right data annotation skills, or pull in and train employees from other areas. If you do hire new data annotators, they may find themselves with idle time after the initial ramp-up and during lulls in the AI lifecycle. And if you need to increase annotation volume for new use cases, you may find yourself hiring all over again, because your last round of hires has capacity for only a finite amount of additional work.

Scaling with crowdsourced teams: Fast, but low quality

Crowdsourcing — or using large numbers of anonymous people to complete data annotation tasks — can help you scale quickly, but expect low-quality annotations in need of reworking. Several services exist to help you start and scale crowdsourcing projects cheaply and quickly. That’s the good side. On the bad side, the crowdsourced people labeling your data are essentially anonymous. Who’s doing what may change from day to day. And you won’t know if workers are being treated fairly. But the most significant drawback is that crowdsourced workers are paid per task, which explicitly incentivizes them to focus on speed, often at the expense of quality. And as we discussed in a recent post, poorly annotated images and videos for retail AI use cases give rise to additional risks and costs. When crowdsourcing is good it is very, very good; but when it is bad, it is awful. In the case of retail AI, chances are you don’t want to go there.

Scaling with managed workforces: Fast, with high quality

Managed workforces can help you scale quickly and get to market successfully with the highest-quality annotations. Because annotators must meet or exceed strict quality standards, you get the same (or better) quality than you would from internal teams, without the burden of team management. Just like internal teams, assigned annotators become experts on your stores, use cases, and products, which helps you avoid AI “drift.” Drift happens when the original context changes but the model doesn’t. It’s a real concern not only for production models that aren’t consistently maintained and retrained, but also when you use the same foundational model in totally different contexts (think: airport kiosk vs. mall store vs. baseball apparel shop).

When you partner with a workforce provider, your annotators are always ready to update training data whenever you deploy an autonomous checkout solution to a new store, add products, or run into the edge cases that inevitably show up, like when a cute, fuzzy dog runs into the store, or when a wheelchair that never appeared in the annotated training data rolls in and confuses the model. Managed workforces also support the entire AI lifecycle, giving you the flexibility to modify the scope of projects as your needs change. That flexibility helps you control costs as projects scale, which is hard to do with in-house teams or the crowdsourcing model, where scaling data labeling can quickly become prohibitively expensive.

A little bit about CloudFactory

Speaking of managed workforces, CloudFactory is ready to help you start and quickly scale data annotation for retail computer vision initiatives like autonomous checkout.

One of our clients, a leading autonomous checkout company, had this to say about our ability to scale at speed:

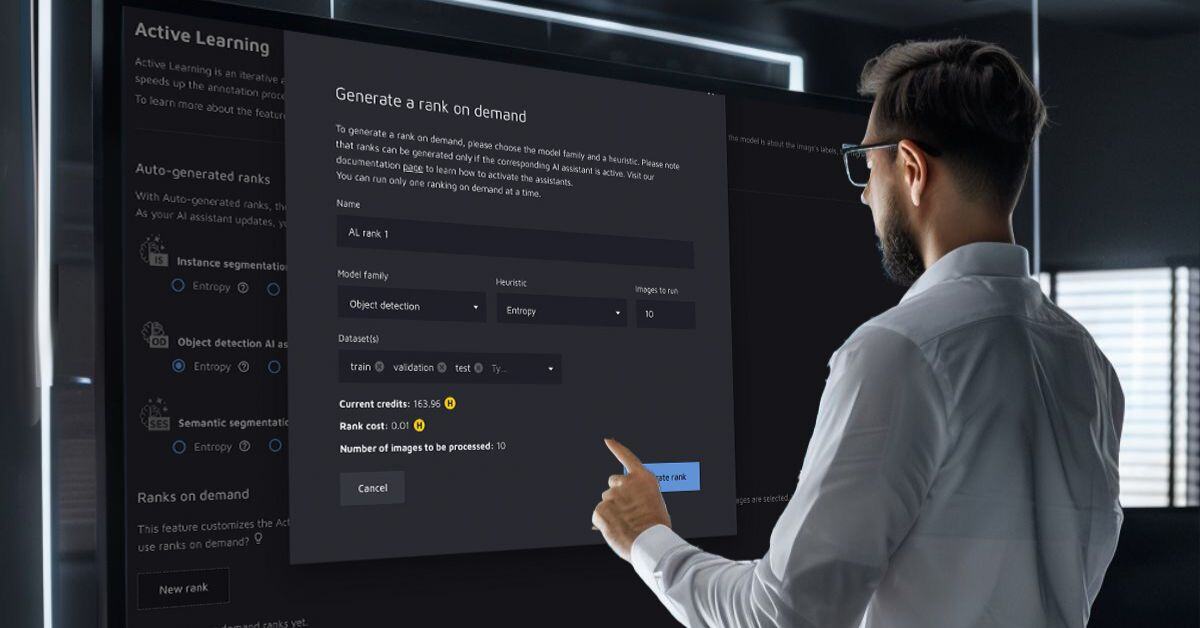

“The speed and scalability of the annotation teams let us quickly move forward as we open different stores. Every time we open a store, we need work done with pose annotations. Sometimes there could be four stores opening at once. We needed a team to have the flexibility to scale, could handle Dataloop, learn our different use cases, and handle the use cases quite well as they popped up.”

And as the same client attests, we help you scale with speed while keeping a focus on quality:

“CloudFactory does a really good job with quality. With the previous vendor, there were so many mistakes that we wondered whether to just throw it out and have it redone, which is ultimately what happened.”

Interested in a broader understanding of CloudFactory’s capabilities? We recommend learning more about our:

- Video Annotation

- Computer Vision

- Image Annotation

- AI & Machine Learning

- Retail